Flash-forward: Storytell Just Got Even More Powerful with Google’s Gemini 2.0

February 6, 2025

Fresh off integrating OpenAI’s o3-Mini, we are now harnessing the power of Google’s Gemini 2.0 Flash—just hours after its release. Like a bolt of AI innovation, this upgrade supercharges Storytell, making every response sharper, faster, and more precise.

Why it matters

Google’s Gemini 2.0 Flash is built for speed and efficiency, making it perfect for AI-driven applications that demand quick, intelligent responses. Here are some key use cases where Gemini 2.0 Flash excels:

- Content generation at scale – Whether drafting long-form articles, refining product descriptions, or summarizing large documents, Gemini 2.0 Flash provides high-speed, context-aware responses.

- Real-time data analysis – Quickly process and interpret vast amounts of structured and unstructured data, making it valuable for research and reporting.

- Conversational AI – Enhance chatbot interactions and virtual assistants with more coherent, responsive, and contextually rich dialogue.

- Multimodal AI tasks – With its large token window, it can process diverse inputs, from text to code, images, and structured data, making it suitable for cross-functional AI applications.

Its 1 million token context window lets it keep long documents and extended conversations in view at once, so longer, more complex queries still get context-rich responses. For Storytell, the integration means higher-quality answers without giving up speed.
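To get a feel for what a 1 million token window means in practice, here is a rough sketch that estimates whether a piece of text would fit. The 4-characters-per-token ratio is only a common rule of thumb for English text, not Gemini's actual tokenizer, and the function names and the output reserve are hypothetical choices made for illustration.

```python
# Back-of-the-envelope check against the 1 million token window quoted above.
CONTEXT_WINDOW_TOKENS = 1_000_000
CHARS_PER_TOKEN = 4  # assumption: rough heuristic; real tokenizers vary


def estimate_tokens(text: str) -> int:
    """Estimate the token count by rounding up len(text) / CHARS_PER_TOKEN."""
    return -(-len(text) // CHARS_PER_TOKEN)  # ceiling division


def fits_in_context(text: str, reserved_for_output: int = 8_000) -> bool:
    """Return True if the estimated input plus a reserve for the reply fits."""
    return estimate_tokens(text) + reserved_for_output <= CONTEXT_WINDOW_TOKENS


if __name__ == "__main__":
    document = "word " * 50_000  # about 250,000 characters
    print(estimate_tokens(document), fits_in_context(document))
```

Treat the result as an estimate only; an exact count would require the model's own tokenizer.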

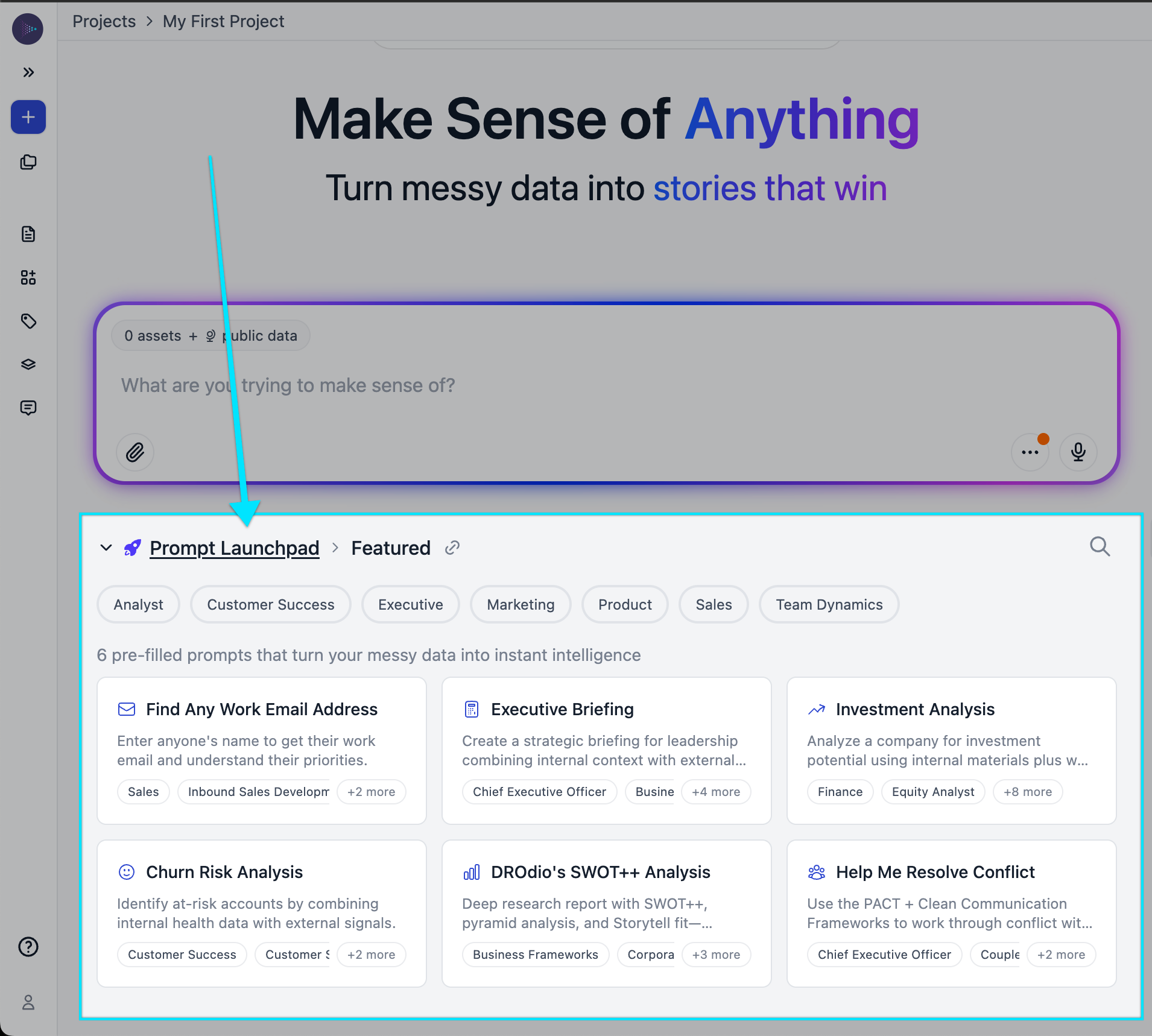

How to use Gemini 2.0 Flash in Storytell

Our LLM Router automatically selects the best AI model for your queries, balancing speed, accuracy, and efficiency. However, if you want to experience Gemini 2.0 Flash directly, you can easily override the router. Here’s how:

1. Open Storytell and navigate to the prompt field.

2. Enter the override command: simply type "Use Gemini Flash for your answer" within your prompt.

3. Submit your query and see how Gemini 2.0 Flash handles your request.

4. Compare responses by running the same prompt with other models to find the best fit for your needs.
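If you assemble prompts in a script before pasting them into Storytell, the override step above can be sketched as a small helper. This only builds the prompt text; it does not call any Storytell API, and the function name is hypothetical. The override phrase itself is the one given in step 2.

```python
# Phrase from step 2 that tells the LLM Router to use Gemini Flash.
OVERRIDE_PHRASE = "Use Gemini Flash for your answer"


def with_gemini_flash_override(prompt: str) -> str:
    """Append the override phrase to a prompt unless it is already present."""
    text = prompt.strip()
    if OVERRIDE_PHRASE.lower() in text.lower():
        return text  # already contains the override; leave it unchanged
    return f"{text}\n\n{OVERRIDE_PHRASE}."


if __name__ == "__main__":
    print(with_gemini_flash_override("Summarize the attached quarterly report."))
```

To compare models as in step 4, submit the original prompt and the overridden version separately and read the two answers side by side.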

With this flexibility, you can put Gemini 2.0 Flash’s fast, context-rich responses to work wherever they fit best, whether you’re drafting content, analyzing data, or improving chatbot interactions.

Learn more about Gemini 2.0 Flash here, and check out our latest blog post on OpenAI’s o3-Mini.
