Faster, Sharper AI: Storytell Now Uses OpenAI’s o3-Mini

February 3, 2025

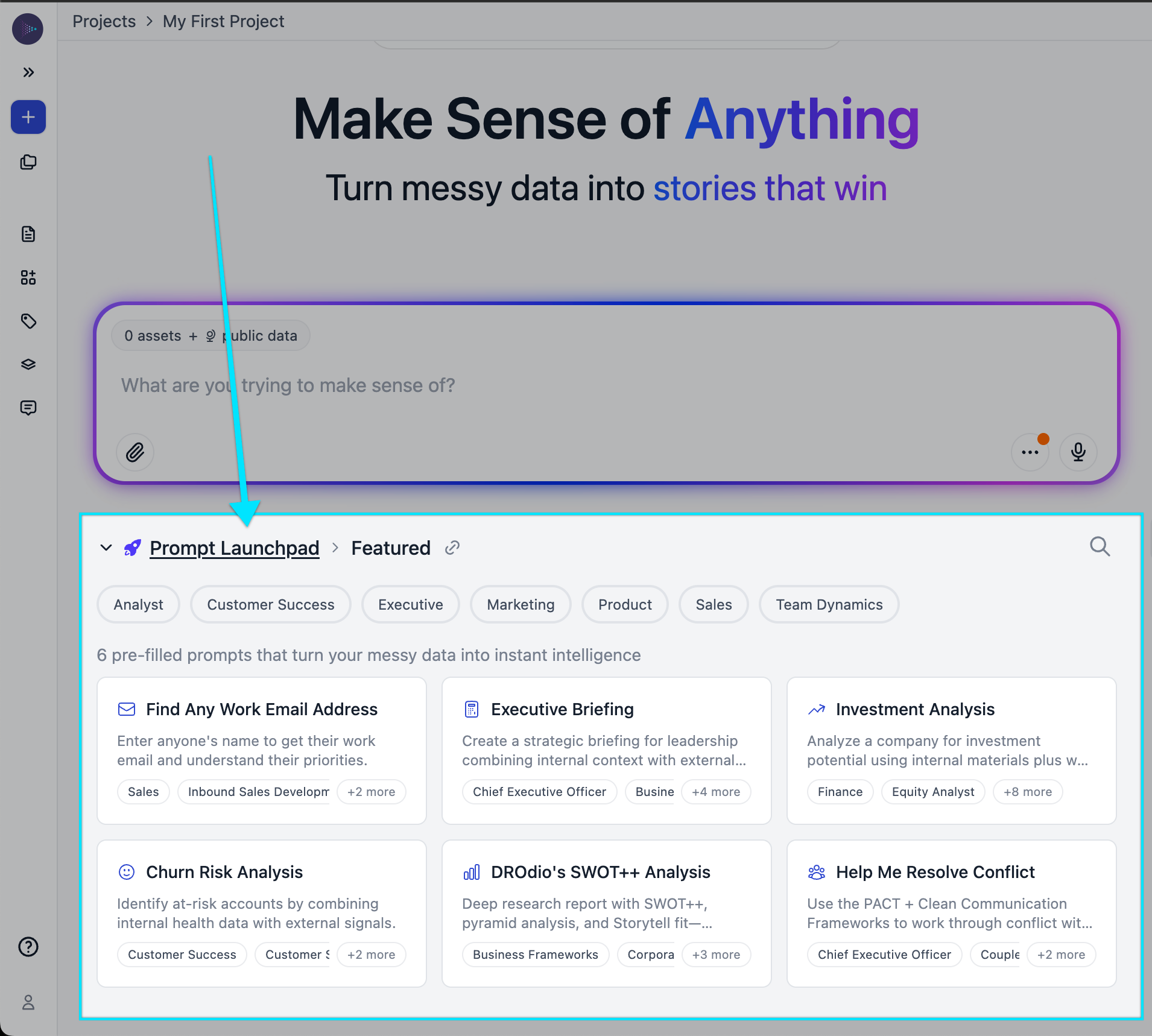

Today, we're happy to announce a significant upgrade to our platform: Storytell now integrates OpenAI’s o3-Mini, a next-generation language model built for speed, efficiency, and high-quality results. Our LLM router “automagically” selects o3-Mini whenever it’s the best fit for your query, delivering faster, more accurate responses with no extra effort on your part.

What’s new?

OpenAI released o3-Mini on January 31, 2025, as part of its latest model lineup, with improvements in efficiency, speed, and accuracy. It generates high-quality responses with low latency, making it well suited to real-time applications such as chat-based interactions, summarization, and content creation. It also excels in science, math, and coding, offering strong STEM capabilities while keeping the cost-effectiveness and low latency of OpenAI’s previous o1-Mini model. You can read OpenAI’s full announcement here.

How Storytell’s LLM router works

Our LLM router selects the best-performing model for each request in real time, weighing efficiency, accuracy, and relevance. With o3-Mini now among the models it can choose from, the router can draw on OpenAI’s latest advancements to improve coherence and speed.

Whenever o3-Mini is the best option for your query, the router selects it automatically. Learn more about how our router works here.

What you can expect

- Improved efficiency with faster, more accurate responses.

- Enhanced STEM capabilities for handling science, math, and coding queries.

- Seamless model selection to ensure queries are processed by the most effective LLM.

- Optimized AI performance to deliver relevant, high-quality content.

How different users benefit

For content creators & writers

If you use Storytell for brainstorming blog ideas, outlining articles, or refining your writing, you'll notice more precise suggestions and smoother flow with OpenAI’s o3-Mini. Whether you're drafting marketing copy or creative fiction, Storytell now delivers sharper results faster.

For marketing analysts & strategists

Need to generate high-quality, SEO-optimized FAQ content in minutes? With OpenAI’s o3-Mini, Storytell now produces cleaner, more relevant responses, helping you refine customer support documents and marketing copy without extra back-and-forth.

For data & AI teams using Storytell for STEM queries

Developers and data teams using Storytell for code generation and debugging will see gains in accuracy and efficiency. o3-Mini is stronger at Python and SQL tasks and at mathematical problem-solving, helping you move from idea to execution faster.

For UX writers & product teams

Need microcopy that’s concise yet effective? With OpenAI’s o3-Mini, Storytell helps UX and product teams craft clearer, more user-friendly messaging, so every word improves the experience without taking up unnecessary space.

What's next?

We continue to refine our system to integrate the latest AI advancements and enhance Storytell’s capabilities. Try it today and experience the difference with OpenAI’s o3-Mini at Storytell.ai.
