Share this post

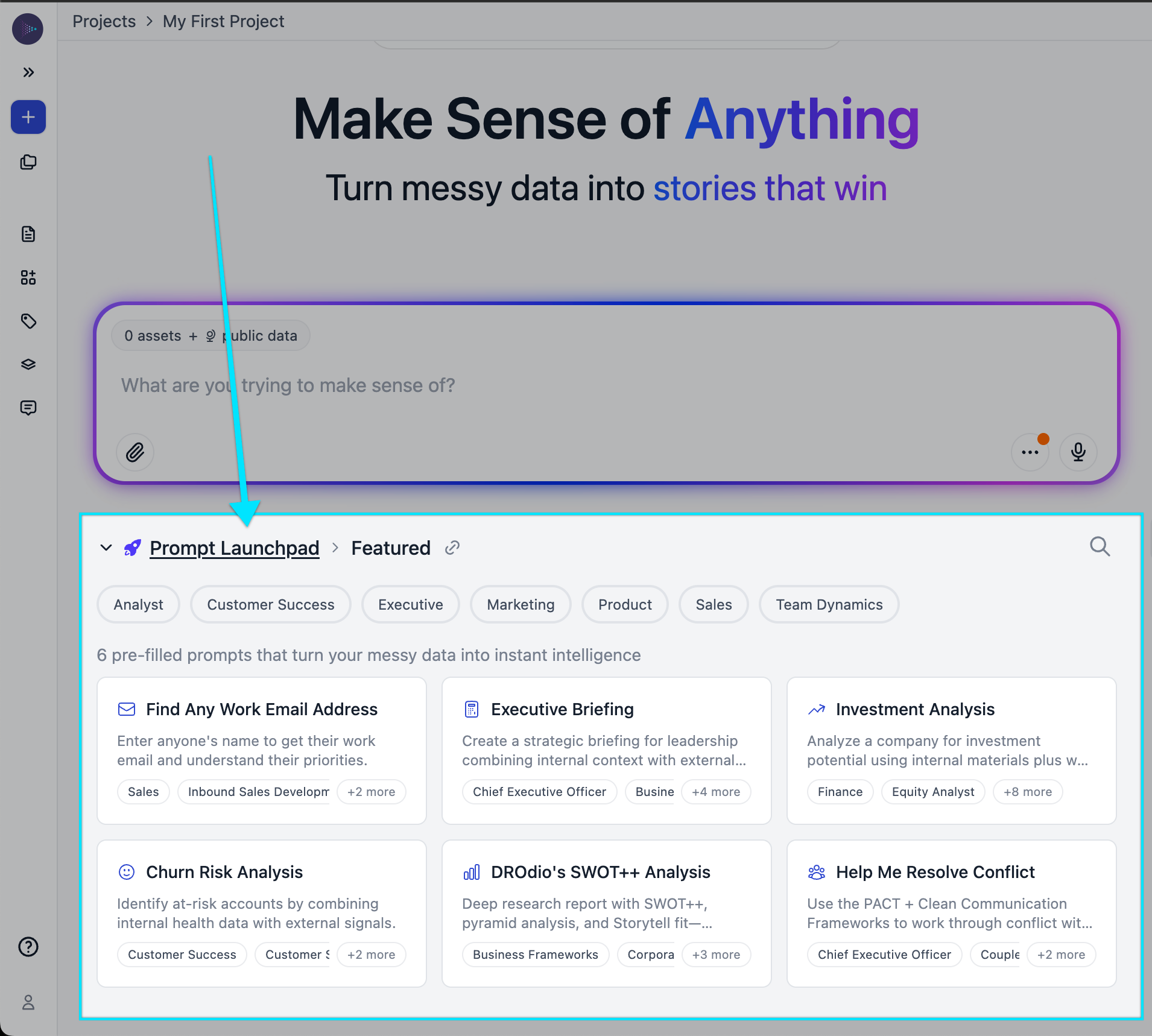

Working with CSVs: Our Original SmartChat™ vs. our new beta

An analysis of the relative capabilities of both platforms

August 12, 2024

Storytell works wonderfully on unstructured data. Our original SmartChat™ platform can handle as many files as you want to throw at it. The ability to upload voluminous PDF, PPT, DOCx, Zoom MP4, emails and other files, tag them, and then find hidden insights and patterns across them.

Things get trickier when you start to add XLS files (I’ll just call them CSVs in this post, since that’s the more broadly used export format).

CSVs are tricky for LLMs in multiple ways

CSVs are tricky for a number of reasons. Here are a few:

- Each CSV likely has a different schema:

- Compared to a database that you query with SQL and are structured in a standard format (i.e., the definition of each column in the database never changes), each CSV has its own structure (i.e., its own schema). Sometimes row 1 has labels for each column. Sometimes row 2 also contains sub-labels. Sometimes there are stacked blocks of data where multiple unrelated datasets are included in the same sheet, often separated by blank rows or header rows for each new block of data.

- Such a layout makes it very challenging for an LLM to properly parse and analyze the data, as additional steps are needed to separate and clean the individual datasets.

- The data can live far from the label:

- LLMs work best when they can calculate mathematical relationships on adjacent content (via embeddings, which are a specialized type of vector used to encode complex, semantic relationships from categorical data).

- But in CSVs, if you’ve got a value in cell

AA2347, the label that describes what that value is referring to might live in the first column, over atA2347, or up at the first row,AA1. Either way, the label that provides context to the value is very far from it, which wrecks havoc with LLMs.

- CSVs are very verbose and need large context windows:

- One way to handle CSVs is to turn them into other formats, including HTML, JSON and XML.

- But this output is very verbose, and when an LLM tries to parse the data, it gets overwhelmed with all the noise, which takes up much of the LLM’s available context window.

How to work around the issues with CSVs

Storytell’s engineers are hard at work making CSVs “just work” with our system. This is among the most progressive work being done in AI/ML today (as an example: A team of Microsoft researchers just released a paper in July titled “SpreadsheetLLM: Encoding Spreadsheets for Large Language Models”).

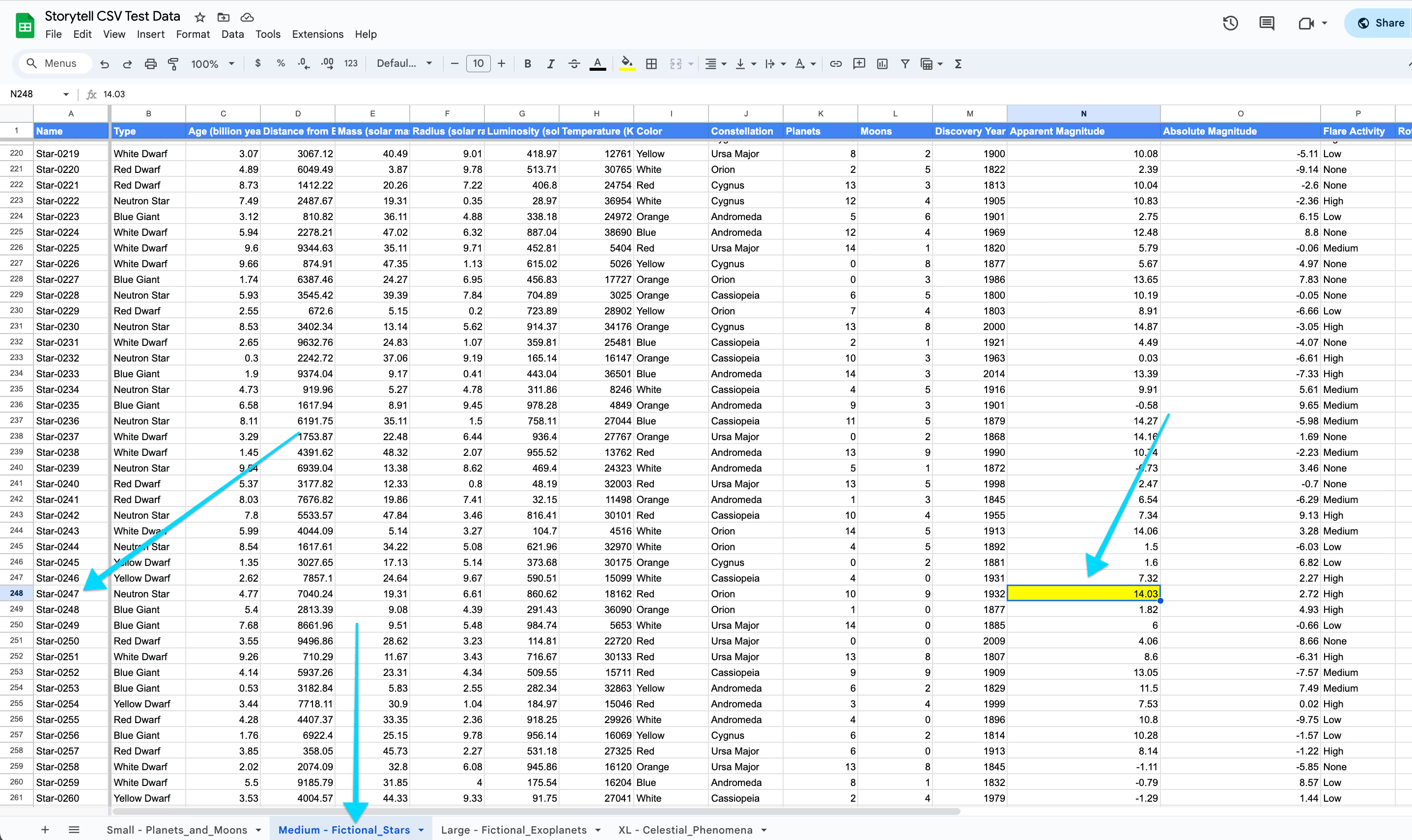

For the examples below, I’m using this test data set, which consists of:

- Small CSV (10 rows, 5 columns — 50 total data points)

- Medium CSV (500 rows, 20 columns - 10,000 data points)

- Medium Plus CSV (33k rows, 60 columns - 200,000 data points)

- Large CSV (5k rows, 100 columns — 500,000 data points)

- XL CSV (10k rows, 100 columns — 1 million data points)

In the meantime, here’s by better understanding the limitations of CSVs today, you can also find ways to get value from them with Storytell. Here are a few pro-tips:

CSVs: Using the original SmartChat™ in “Analytics Mode” vs. the new Storytell beta:

When our oroginal SmartChat™ detects structured data, it goes into an “analytics mode.” This means it’s spinning up a server to write a program that tries to answer your question — it’s writing custom code to try to give you a response.

Because of this, it’s slower than regular SmartChat™, and CSVs are limited to 20 megabytes in size. I’ve also found that SmartChat has a challenge with CSVs above 10,000 data points (althought the file may upload, you'll see an error that says "unable to generate embeddings". This is something we're working on improving).

Working with the Medium-sized file (10,000 data points), I’m going to ask Storytell a question about some data about midway through the data set — the “Apparent Magnitude” (column N) of Star 247 (row 248 out of 500). Here’s the actual answer:

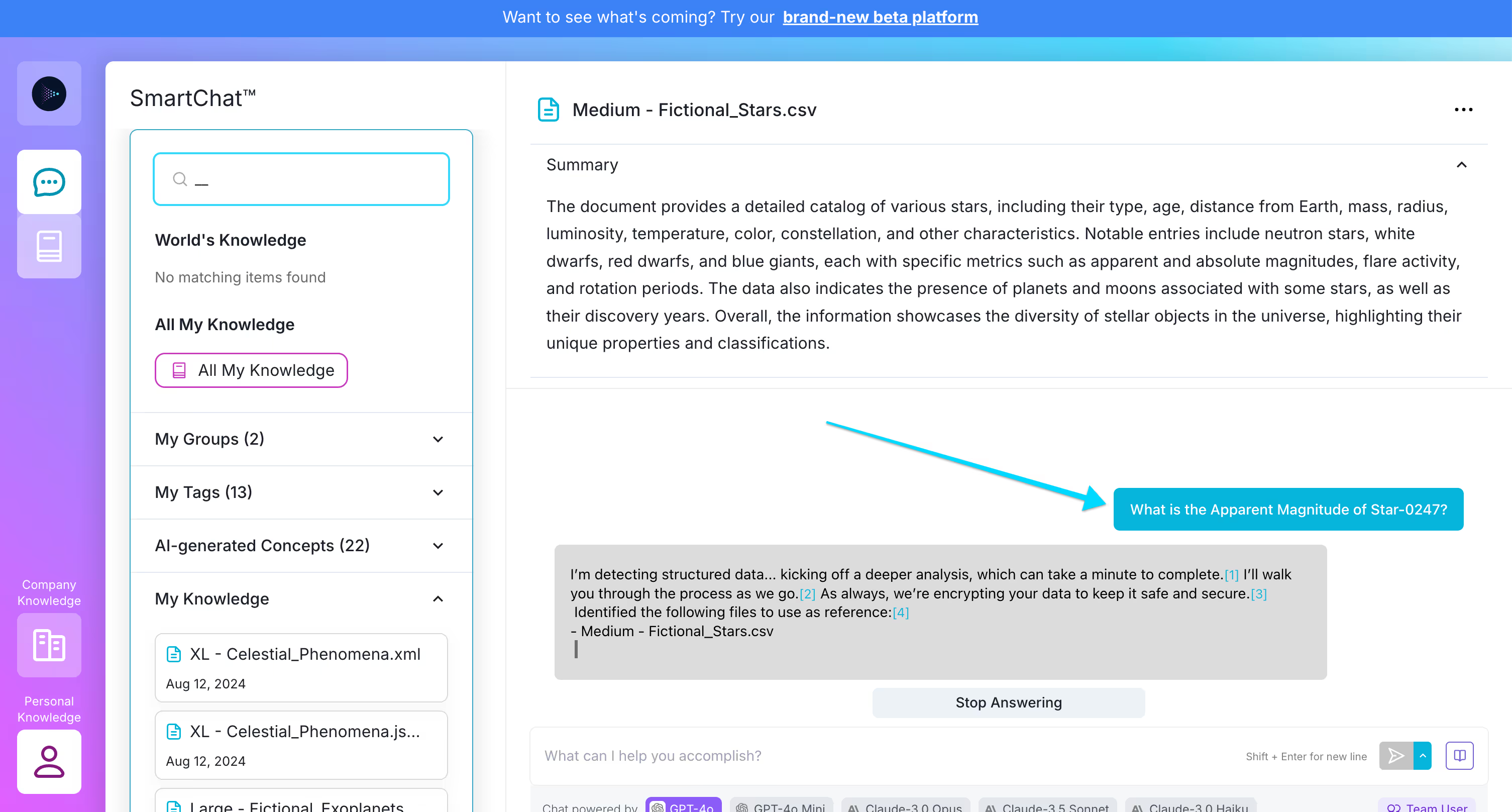

Here’s an example of me asking SmartChat™ this question:

And after about 30 seconds, you can see that Storytell provides the answer:

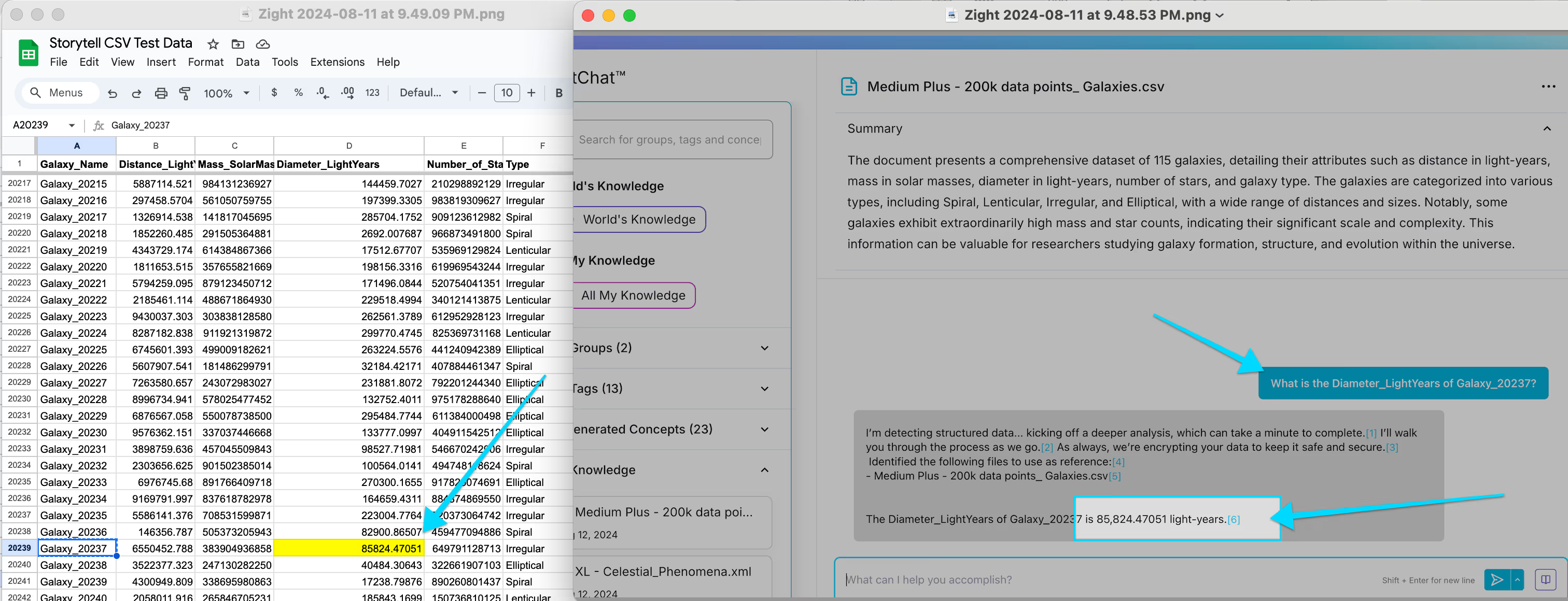

I also tried the same analysis with the "Medium Plus" CSV data set which contains 200,000 data points vs. 10,000. It was also able to answer:

The Original SmartChat™ can work with 200,000 data points in "Analytics Mode."

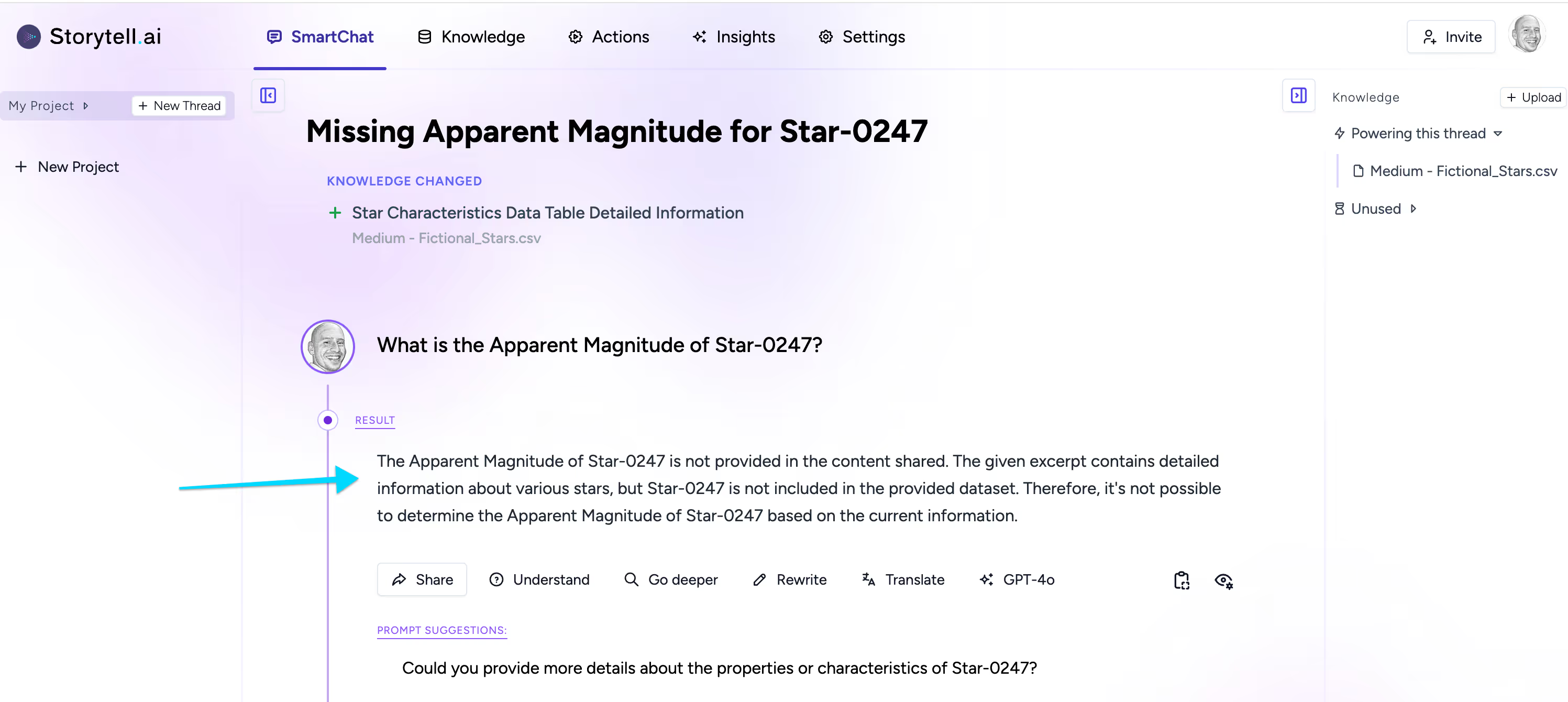

We are building a new Storytell beta platform which is much faster than the original SmartChat. However, it doesn’t got into the same “analytics” mode as the original. This means that it can’t yet give as accurate an answer larger file sizes due to context window limitations. For example, I asked the same question of the new platform and it didn’t have the answer:

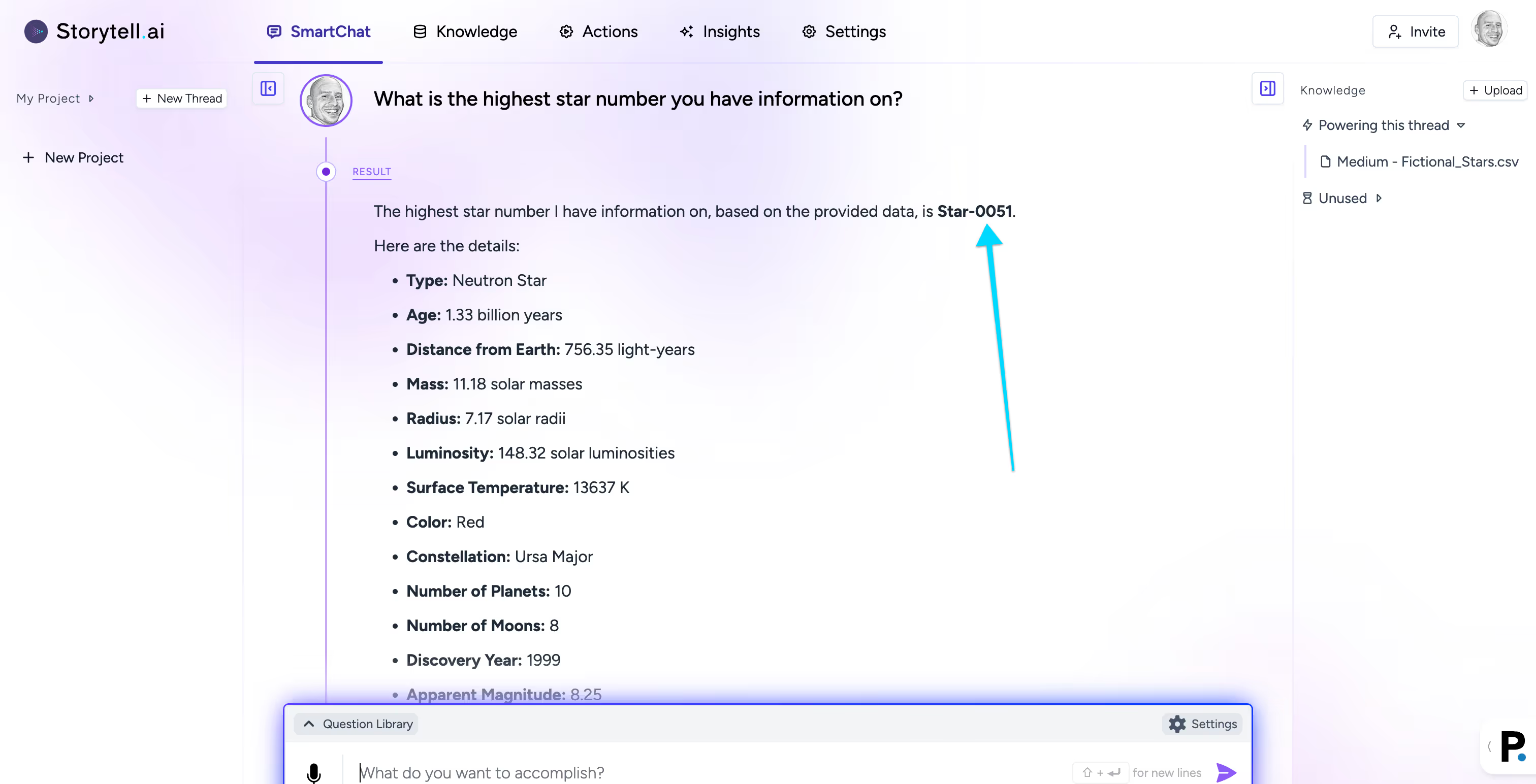

It only had data up to star #51 (10% of the total file — about 1,000 total data points), but it did answer quickly and accurately for the data it had:

Original vs. Beta: The original SmartChat will give you accurate answers on larger CSV data files in "Analytics Mode," but it’s slow. The new beta will give you fast answers, but it’s current limited to about 1,000 total data points.

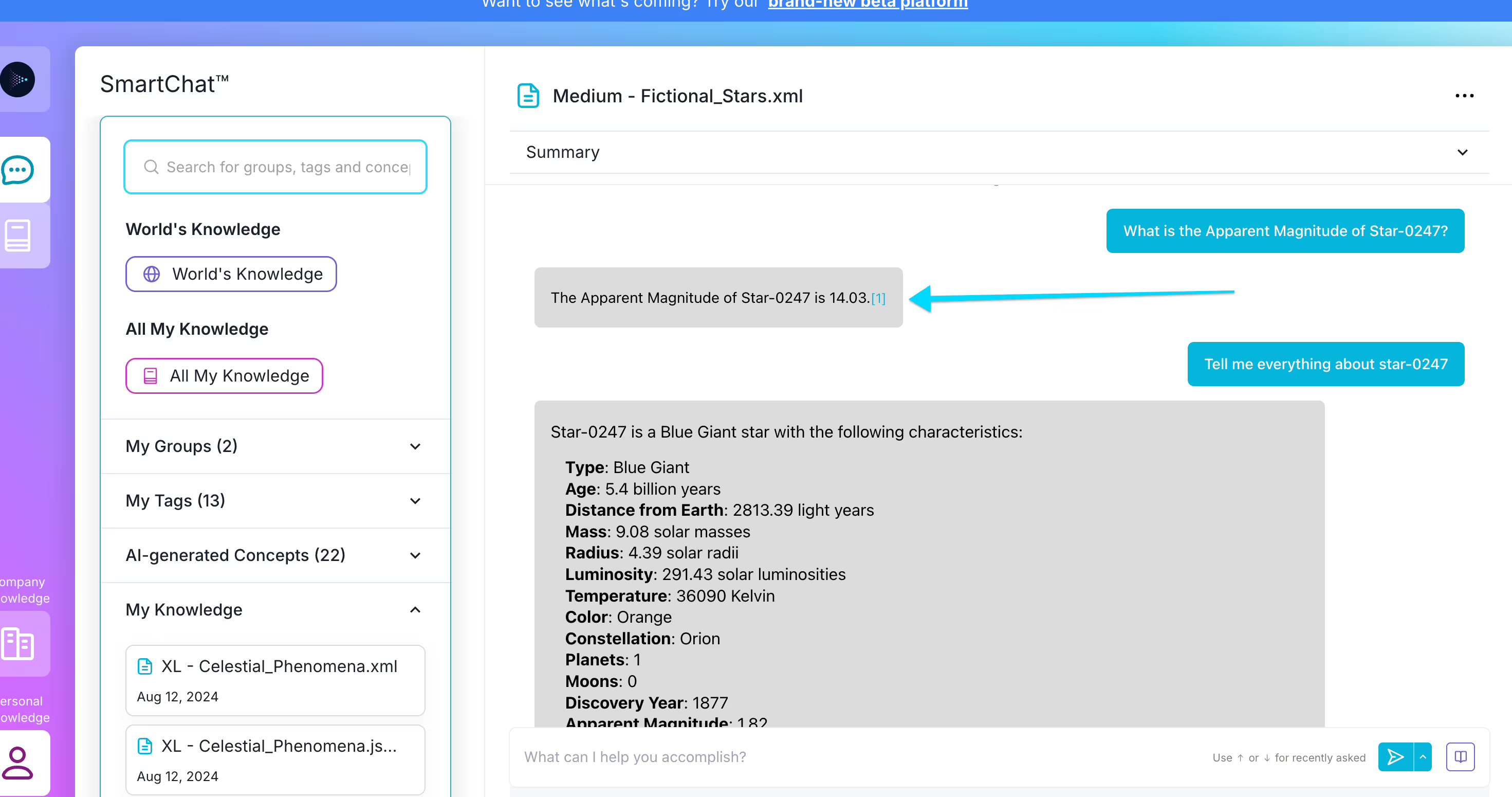

You may be able to get more mileage by converting your CSV to XML. For example, the original SmartChat™ answered the same question above much faster when asked to examine an XML file:

Converting CSV to XML is not consistently as accurate — when I asked the original SmartChat to “tell me everything” about start 0247, it said the star was a Blue Giant, which is actually the characteristic of the next row, start 0248.

Conclusion:

To get accurate answers at scale, you’ll need to use the original SmartChat in analytics mode. You can use the new Storytell beta for smaller data sets — it’s fast but not yet as robust. We’re working to improve that!

Gallery

No items found.

Changelogs

Here's what we rolled out this week

No items found.