
Get the best answer, every time

You'll always get the best answer from OpenAI, Claude, Gemini, Llama and more

November 14, 2024

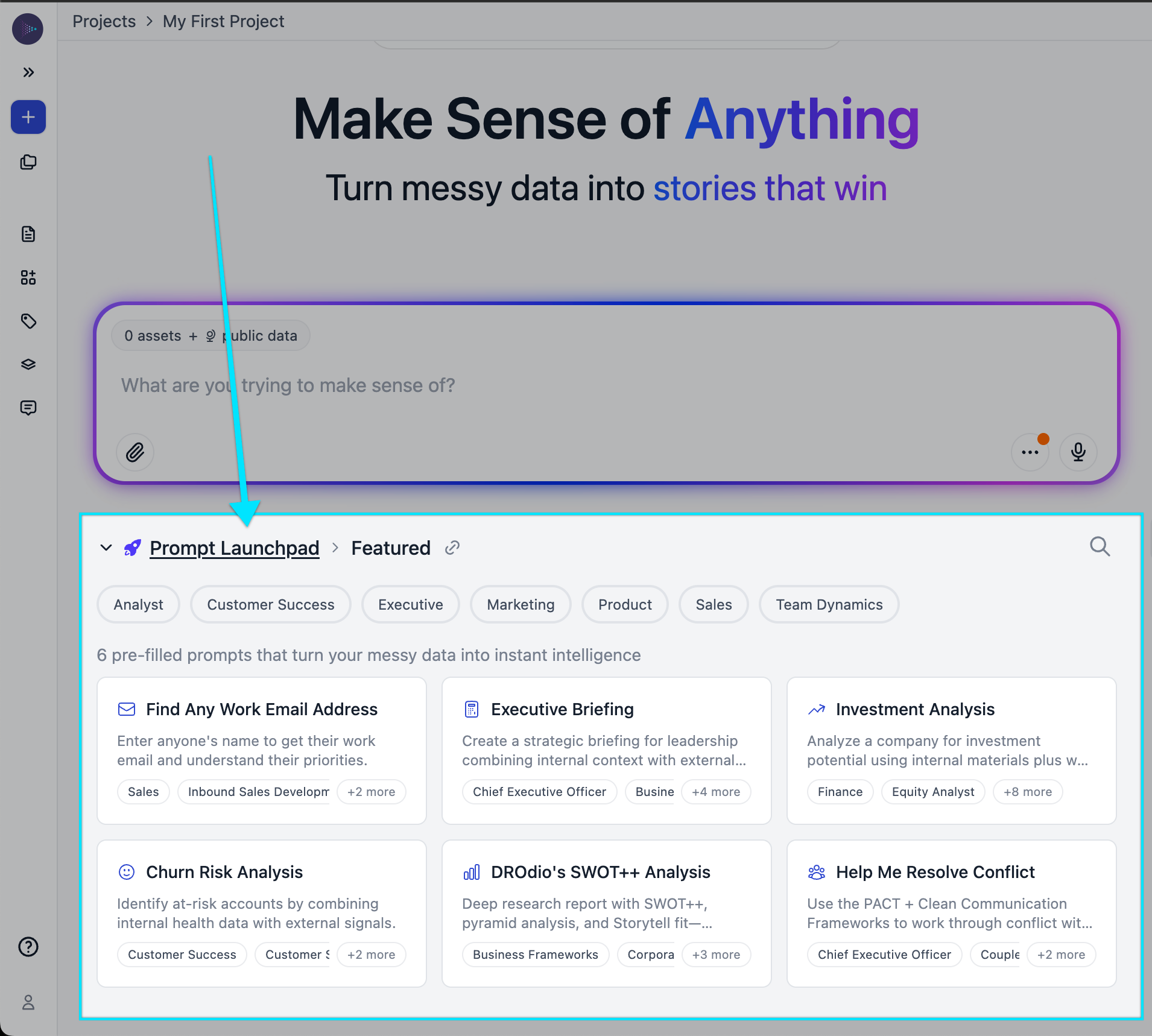

The Best Answer, Every Time

What if you could be confident that the best Large Language Model (LLM) would answer your question? Now you can, via our LLM router, which analyzes each question you ask and selects the best LLM to answer it.

Want to bring our Collaborative Intelligence Platform into your enterprise? Contact us here.

Since Storytell is built to be enterprise-grade, our LLM router allows enterprise customers to "Bring your own LLM" to add to our LLM farm, and then set custom rules that support scenarios like the following:

- Restrict sensitive queries from being answered by foundation models: Storytell is built to keep AI use inside the enterprise safe and secure, with a robust multi-tenant architecture and end-to-end encryption, and no LLMs are trained on your data, even for free users. Some enterprises want to go further, ensuring that their most sensitive queries, which might contain non-public financial, customer, roadmap or other data, are answered only by bespoke, fine-tuned open-source LLMs specific to that enterprise. Our LLM router supports this type of control out of the box, with custom rule sets like: "Ensure any queries by the finance team on company data are routed to in-house LLMs."

- Prioritize accuracy, speed and cost with granular controls: On a per-query basis, our LLM router can choose the best LLM based on highest accuracy, fastest response, lowest cost, or a dynamic mixture of all three. Enterprise customers can tune the balance among these factors to the needs of each user, team or department.
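The post doesn't describe how a rule like the finance example is expressed internally. As a minimal sketch, assuming rules are plain predicates over query metadata (the `Query` fields, the model IDs, and `acme-finance-llama` are all hypothetical), a rule could narrow the pool of eligible models before the router picks one:

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Query:
    text: str
    team: str
    uses_company_data: bool  # hypothetical flag: the query references internal documents


@dataclass(frozen=True)
class RoutingRule:
    description: str
    matches: Callable[[Query], bool]
    allowed_models: frozenset  # models permitted when the rule matches


# Hypothetical model identifiers, for illustration only.
ALL_MODELS = frozenset({"gpt-4o", "claude-3.5-sonnet", "gemini-1.5-pro", "acme-finance-llama"})
IN_HOUSE_MODELS = frozenset({"acme-finance-llama"})

RULES = [
    RoutingRule(
        description="Queries by the finance team on company data go to in-house LLMs",
        matches=lambda q: q.team == "finance" and q.uses_company_data,
        allowed_models=IN_HOUSE_MODELS,
    ),
]


def eligible_models(query: Query, models: frozenset = ALL_MODELS, rules: list = RULES) -> frozenset:
    """Intersect the model pool with the allow-list of every rule that matches."""
    candidates = frozenset(models)
    for rule in rules:
        if rule.matches(query):
            candidates &= rule.allowed_models
    if not candidates:
        # Fail closed: never fall back to an unrestricted model for a restricted query.
        raise ValueError(f"No model satisfies the routing rules for team {query.team!r}")
    return candidates


finance_q = Query("Summarize our unreleased Q3 numbers", team="finance", uses_company_data=True)
print(sorted(eligible_models(finance_q)))  # ['acme-finance-llama']
```

Failing closed when no model qualifies is a design choice for this sketch: a restricted query should never silently fall back to a foundation model.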

How Storytell's LLM Router Works

Here's a video with Alex, Storytell's lead engineer on the LLM router, showing DROdio, our CEO, how the router works:

By default, Storytell's LLM router works with the following Large Language Models:

- OpenAI:
  - GPT-4o
  - GPT-4o mini
  - o1-mini
  - o1-preview (available to enterprise customers)

- Anthropic:
  - Claude Haiku
  - Claude 3.5 Sonnet
  - Claude Opus (available to enterprise customers)

- Google:
  - Gemini 1.5 Flash-8B
  - Gemini 1.5 Flash
  - Gemini 1.5 Pro

- Open Source:
  - Llama 3.1 (available to enterprise customers)

- "Bring your own LLM" (available to enterprise customers)

Choosing the best LLM to answer your query

Our LLM router evaluates your query to determine what category it falls into. Available categories include:

- Reasoning & Knowledge: General queries that require the LLM to draw on company or world knowledge to arrive at an answer

- Scientific Reasoning & Knowledge: Scientific queries that require the LLM to draw on company or world knowledge to arrive at an answer

- Quantitative Reasoning: Queries that require the LLM to do math and computations

- Coding: Queries that require the LLM to write computer code

- Communication: Queries that require the LLM to communicate concepts effectively to a human (like writing an effective email to your boss)
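The post doesn't say how a query is assigned to one of these categories; a production router would more likely use a trained classifier or an LLM call. Purely to illustrate the step, this sketch swaps in a simple keyword-overlap heuristic (the keyword lists are assumptions, not Storytell's):

```python
import re

# Illustrative keyword lists only; not Storytell's actual classification method.
CATEGORY_KEYWORDS = {
    "Coding": frozenset({"code", "function", "python", "sql", "bug", "regex", "compile"}),
    "Quantitative Reasoning": frozenset({"calculate", "sum", "average", "percent", "percentage", "growth"}),
    "Scientific Reasoning & Knowledge": frozenset({"protein", "molecule", "physics", "chemistry", "enzyme"}),
    "Communication": frozenset({"email", "draft", "rewrite", "tone", "announcement", "memo"}),
}
DEFAULT_CATEGORY = "Reasoning & Knowledge"


def classify(query: str) -> str:
    """Return the category whose keywords overlap the query most; ties keep the earlier category."""
    words = set(re.findall(r"[a-z0-9]+", query.lower()))
    best, best_hits = DEFAULT_CATEGORY, 0
    for category, keywords in CATEGORY_KEYWORDS.items():
        hits = len(words & keywords)
        if hits > best_hits:
            best, best_hits = category, hits
    return best


print(classify("Fix the bug in this Python function"))  # Coding
print(classify("Draft an email to my boss"))  # Communication
print(classify("Who are our largest customers?"))  # Reasoning & Knowledge
```

Queries that match no keywords fall back to the general "Reasoning & Knowledge" category.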

The LLM router will select the LLM with the highest benchmark score for the selected category while also considering costs and response time tradeoffs based on configurable preferences.
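One way to picture "highest benchmark score, adjusted by configurable preferences" is a weighted score. This is a minimal sketch under stated assumptions: each metric is min-max normalized across the candidates, and the weights `w_quality`, `w_speed` and `w_cost` are hypothetical stand-ins for the configurable preferences. The model names and numbers are made-up placeholders, not real benchmark results.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelStats:
    name: str
    quality: float      # benchmark score for the query's category (higher is better)
    latency_s: float    # typical time to answer, in seconds (lower is better)
    cost_per_1k: float  # price per 1,000 tokens, in USD (lower is better)


def _normalize(values, higher_is_better=True):
    """Min-max scale to [0, 1] so that 1.0 always means 'best on this metric'."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [1.0] * len(values)
    scaled = [(v - lo) / (hi - lo) for v in values]
    return scaled if higher_is_better else [1.0 - s for s in scaled]


def pick_model(models, w_quality=1.0, w_speed=0.0, w_cost=0.0):
    """Return the name of the model with the highest weighted score."""
    q = _normalize([m.quality for m in models])
    s = _normalize([m.latency_s for m in models], higher_is_better=False)
    c = _normalize([m.cost_per_1k for m in models], higher_is_better=False)
    scores = [w_quality * qi + w_speed * si + w_cost * ci for qi, si, ci in zip(q, s, c)]
    best = max(range(len(models)), key=scores.__getitem__)
    return models[best].name


# Made-up placeholder numbers, not real benchmark results.
MODELS = [
    ModelStats("model-a", quality=90, latency_s=8.0, cost_per_1k=10.0),
    ModelStats("model-b", quality=88, latency_s=2.0, cost_per_1k=1.0),
    ModelStats("model-c", quality=70, latency_s=1.0, cost_per_1k=0.5),
]
print(pick_model(MODELS))                                    # model-a (quality only)
print(pick_model(MODELS, w_quality=1, w_speed=1, w_cost=1))  # model-b (balanced)
```

With quality as the only weight, the sketch reduces to "pick the highest benchmark score"; raising the speed and cost weights shifts the choice toward faster, cheaper models.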

One of Storytell's product principles is speed: the highest-quality answer isn't helpful if it takes a long time to arrive. If an LLM scores nearly as well as the top-scoring LLM but is substantially faster, we automatically prioritize the faster LLM.
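The post doesn't quantify "nearly as well" or "substantially faster". A minimal sketch, assuming illustrative thresholds of within 3% of the top score and at least 1.5x faster (both are hypothetical parameters):

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    name: str
    quality: float    # category benchmark score (higher is better)
    latency_s: float  # typical response time in seconds (must be > 0)


def prefer_faster(candidates, quality_tolerance=0.03, min_speedup=1.5):
    """Start from the top-scoring model; switch to a near-top model only if it is much faster.

    quality_tolerance: how far below the top score still counts as "nearly as well".
    min_speedup: how much faster counts as "substantially faster".
    Both thresholds are illustrative assumptions.
    """
    top = max(candidates, key=lambda c: c.quality)
    near_top = [c for c in candidates if c.quality >= top.quality * (1 - quality_tolerance)]
    fastest = min(near_top, key=lambda c: c.latency_s)
    if top.latency_s / fastest.latency_s >= min_speedup:
        return fastest
    return top


a = Candidate("model-a", quality=90, latency_s=8.0)
b = Candidate("model-b", quality=88, latency_s=2.0)
c = Candidate("model-c", quality=70, latency_s=1.0)
print(prefer_faster([a, b, c]).name)  # model-b: within 3% of the top score and 4x faster
```

`model-c` is the fastest overall but is never considered, because its score falls outside the quality tolerance.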

Cost optimization is another key factor in our LLM selection process, and enterprises can configure it based on their needs. Building on the quality and speed analysis, Storytell's router identifies whether more cost-effective options exist among the high-performing LLMs. When an alternative model delivers comparable quality and speed at a significantly lower cost, the router selects it, so you get optimal value without compromising on performance.
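A minimal sketch of this cost step, applied after the quality and speed pick. The thresholds are assumptions chosen for illustration: "comparable" means within 3% of the chosen model's quality score and at most 20% slower, and "significantly lower cost" means at least 50% cheaper. Model names and prices are placeholders.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    name: str
    quality: float      # category benchmark score (higher is better)
    latency_s: float    # typical response time in seconds
    cost_per_1k: float  # price per 1,000 tokens in USD


def prefer_cheaper(chosen, candidates, quality_tolerance=0.03,
                   latency_tolerance=1.2, min_savings=0.5):
    """Swap the quality/speed pick for a much cheaper model of comparable quality and speed.

    All three thresholds are illustrative assumptions.
    """
    # Include the current pick so the list is never empty.
    comparable = [chosen] + [
        c for c in candidates
        if c.quality >= chosen.quality * (1 - quality_tolerance)
        and c.latency_s <= chosen.latency_s * latency_tolerance
    ]
    cheapest = min(comparable, key=lambda c: c.cost_per_1k)
    if cheapest.cost_per_1k <= chosen.cost_per_1k * (1 - min_savings):
        return cheapest
    return chosen


chosen = Candidate("model-b", quality=88, latency_s=2.0, cost_per_1k=1.00)
others = [
    Candidate("model-d", quality=87, latency_s=2.2, cost_per_1k=0.20),  # comparable and 80% cheaper
    Candidate("model-c", quality=70, latency_s=1.0, cost_per_1k=0.05),  # cheapest, but quality too low
]
print(prefer_cheaper(chosen, others).name)  # model-d
```

Requiring a large saving before switching avoids churning between models over trivial price differences.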

Seeing the router in action: Reporting and audit logs

Storytell provides robust enterprise reporting and audit logs showing the router in action. Here are some screenshots from an enterprise reporting dashboard:

You're still in control: Override the LLM router's selection

If you'd still like a specific LLM to answer your query, you can override the router's selection by naming that LLM right in your question. Here is an example to show you how it works:
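The exact override syntax isn't specified in the text. As an illustration only, assuming the user simply names a model in the question, detecting the override could look like this (the alias table, model IDs and `route` helper are hypothetical):

```python
import re
from typing import Optional

# Hypothetical alias table mapping phrases a user might type to internal model IDs.
# Neither the phrases nor the IDs come from Storytell.
MODEL_ALIASES = {
    "gpt-4o": "openai/gpt-4o",
    "gpt-4o mini": "openai/gpt-4o-mini",
    "claude 3.5 sonnet": "anthropic/claude-3.5-sonnet",
    "gemini 1.5 pro": "google/gemini-1.5-pro",
}


def requested_model(question: str) -> Optional[str]:
    """Return the model the user named in the question, if any."""
    text = question.lower()
    # Try longer aliases first so "gpt-4o mini" is not mistaken for "gpt-4o".
    for alias in sorted(MODEL_ALIASES, key=len, reverse=True):
        # Require the alias to stand alone, not be part of a longer token.
        pattern = r"(?<![\w-])" + re.escape(alias) + r"(?![\w-])"
        if re.search(pattern, text):
            return MODEL_ALIASES[alias]
    return None


def route(question: str, router_choice: str) -> str:
    """An explicit request in the question overrides the router's own selection."""
    return requested_model(question) or router_choice


print(route("Use GPT-4o mini to draft a reply to this thread", "router-pick"))  # openai/gpt-4o-mini
print(route("What were the key risks raised in the board deck?", "router-pick"))  # router-pick
```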

Enterprise Customers: Tuning our LLM Router to your needs

Our enterprise customers can fine-tune how the LLM router prioritizes each of these factors, down to the level of a query type, department, team or even an individual user. Enterprises can also bring their own LLMs to our LLM farm to add them to the router mix. Contact us to learn more.
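A minimal sketch of how scoped tuning might be resolved, assuming each scope stores quality/speed/cost weights and the most specific match wins. The `Weights` and `RouterConfig` types, the scope names, and the precedence order (user, then team, department, query type, default) are all assumptions for illustration.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass(frozen=True)
class Weights:
    quality: float
    speed: float
    cost: float


@dataclass
class RouterConfig:
    default: Weights
    by_query_type: dict[str, Weights] = field(default_factory=dict)
    by_department: dict[str, Weights] = field(default_factory=dict)
    by_team: dict[str, Weights] = field(default_factory=dict)
    by_user: dict[str, Weights] = field(default_factory=dict)

    def resolve(self, user: str, team: str, department: str,
                query_type: Optional[str] = None) -> Weights:
        """Return the most specific weights configured for this query.

        Precedence (an assumption for this sketch): user > team > department > query type > default.
        """
        scopes = (
            (self.by_user, user),
            (self.by_team, team),
            (self.by_department, department),
            (self.by_query_type, query_type),
        )
        for table, key in scopes:
            if key is not None and key in table:
                return table[key]
        return self.default


config = RouterConfig(
    default=Weights(quality=1.0, speed=0.5, cost=0.5),
    by_query_type={"Coding": Weights(1.0, 0.2, 0.0)},
    by_department={"finance": Weights(1.0, 0.0, 0.2)},
    by_team={"support": Weights(0.6, 1.0, 0.4)},
    by_user={"user-42": Weights(1.0, 0.0, 0.0)},
)
print(config.resolve(user="user-7", team="support", department="operations"))
# Weights(quality=0.6, speed=1.0, cost=0.4)
```

The resolved weights could then feed a weighted selection step like the one the router applies per category.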
