
New in Storytell: Improved data views and a smarter LLM-powered chat

September 19, 2025

During this week's engineering demo, the team shared two sets of updates: significant improvements to the concept graph and the data view filtering system, and a major upgrade to Storytell's chat, including a rebuilt integration with leading large language models (LLMs). These enhancements give power users better tools for managing complex datasets and introduce a more transparent, responsive chat experience.

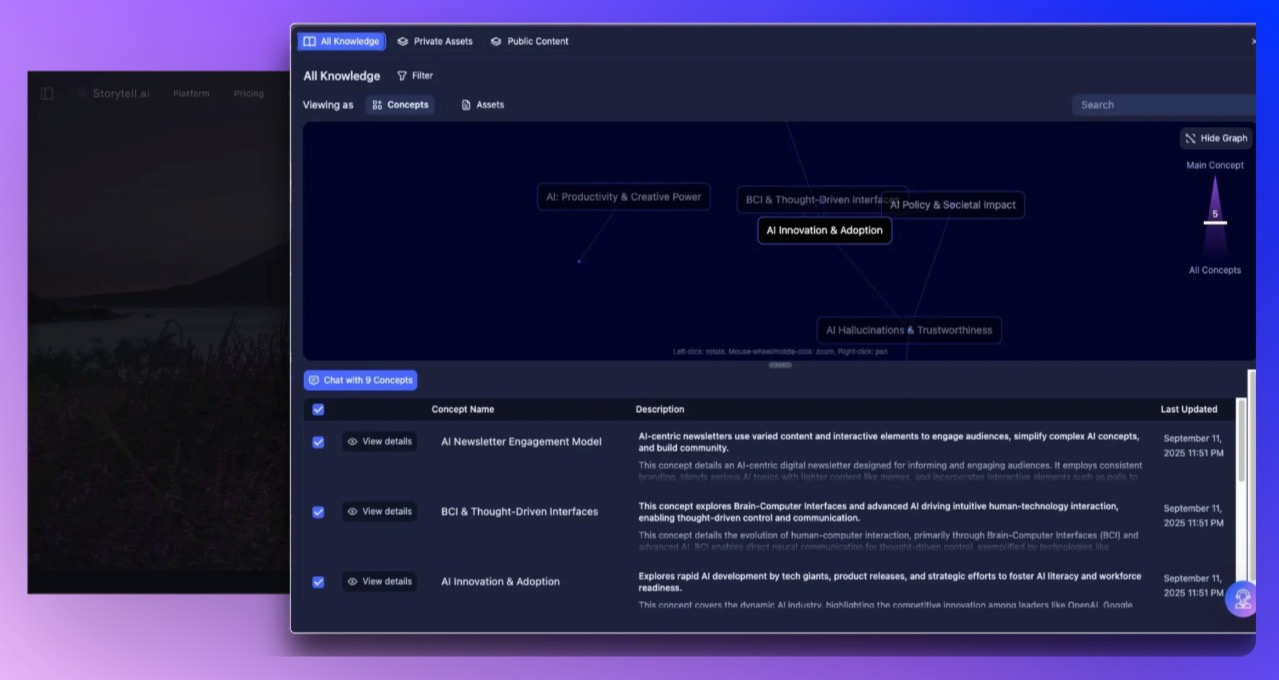

Concept graph now features flexible filtering and data views

The concept graph has been enhanced to offer users greater control and clarity when working with large sets of assets. Recent improvements include a new light mode for the interface, making elements easier to read and navigate. As you adjust the slider between high-level and detailed views, the data table updates dynamically, ensuring a unified and visually intuitive experience. Graph clarity is further improved by fading out background nodes and ensuring key nodes are highlighted with a consistent background, making relationships easier to interpret at a glance.

Streamlined, powerful filtering system

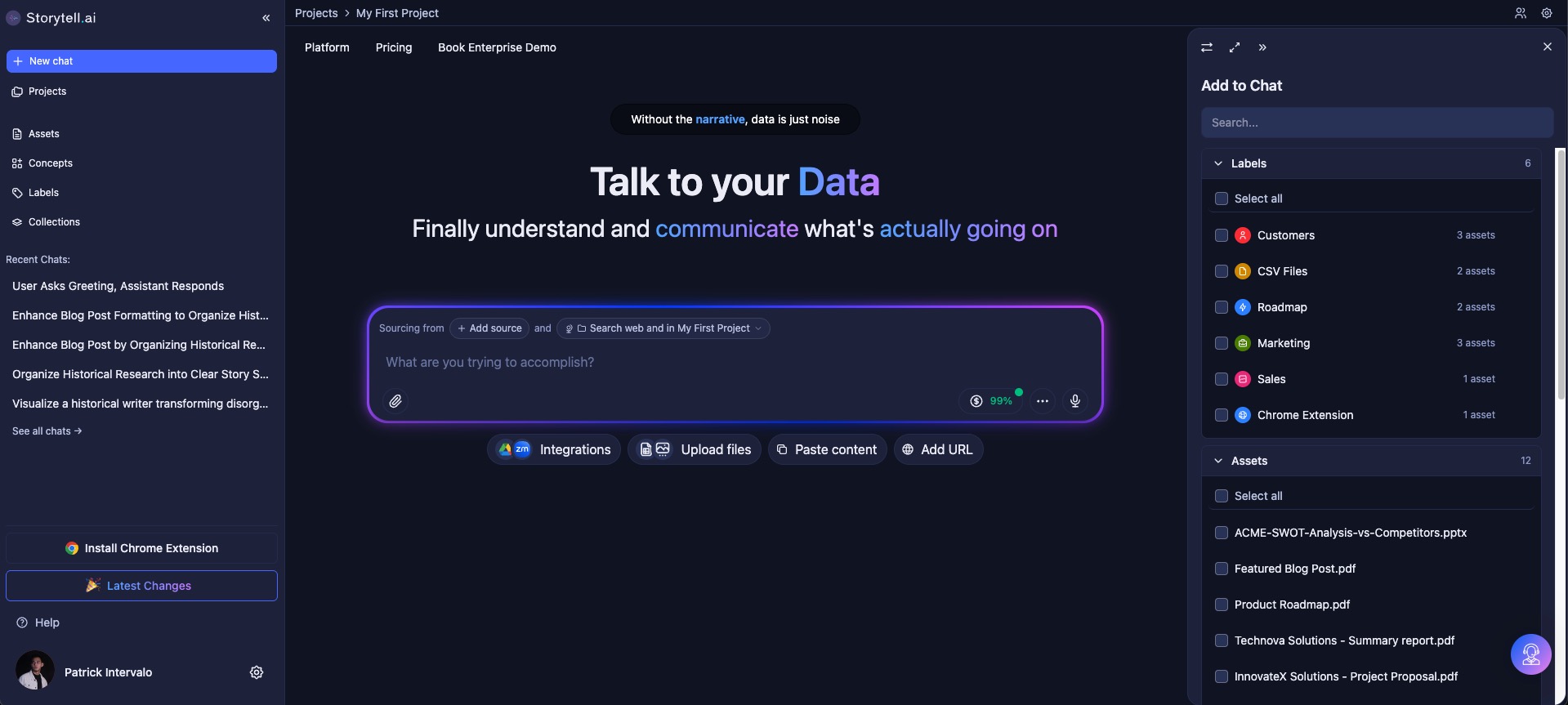

The revamped filtering experience is designed to handle advanced scenarios without complex workarounds. Storytell now lets users select and apply multiple filter labels at once. With a single toggle button, filters can be combined using OR (any label matches) or AND (all labels must match) logic, which streamlines building specific queries. These improvements replace the previous, more rigid filtering setup and make it much easier to home in on exactly the data you need.
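To make the OR/AND distinction concrete, here is a minimal Python sketch of how an any/all label filter could be evaluated. It is not Storytell's implementation: the `Asset` type, the `matches_include` function, the `"any"`/`"all"` mode names, and the sample labels are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Literal

# Hypothetical name for the OR/AND toggle: "any" = OR, "all" = AND.
Mode = Literal["any", "all"]


@dataclass
class Asset:
    name: str
    labels: set[str] = field(default_factory=set)


def matches_include(asset: Asset, selected: set[str], mode: Mode) -> bool:
    """Return True if the asset passes an inclusion filter on `selected` labels."""
    if not selected:
        return True  # nothing selected: every asset passes
    if mode == "any":
        return bool(asset.labels & selected)  # OR: at least one label overlaps
    return selected <= asset.labels  # AND: every selected label is present


assets = [
    Asset("Q3 report", {"finance", "customer"}),
    Asset("Ad audit", {"ad fraud", "McDonald's"}),
    Asset("Churn notes", {"customer"}),
]
selected = {"finance", "customer"}
print([a.name for a in assets if matches_include(a, selected, "any")])  # ['Q3 report', 'Churn notes']
print([a.name for a in assets if matches_include(a, selected, "all")])  # ['Q3 report']
```

Switching the toggle only changes which set operation is used: overlap for "any", subset for "all".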

When working with multiple filters, the new interface introduces an additional control that lets users define whether an asset must match any filter or all filters, making the system adaptable to different workflows. Clear, natural-language explanations accompany these choices, so intent is always transparent. For example, "include any of these two labels" versus "include only assets matching all selected labels." This lets users create both broad and narrowly tailored asset sets.
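The plain-language explanations can be thought of as a small rendering step over the filter state. The sketch below is illustrative only: `describe_filter` and its wording are assumptions, not the exact strings Storytell's interface shows.

```python
from typing import Literal

Mode = Literal["any", "all"]  # hypothetical: "any" = OR, "all" = AND


def describe_filter(labels: list[str], mode: Mode, exclude: bool = False) -> str:
    """Turn a label filter into a plain-English summary (illustrative helper)."""
    if not labels:
        return "No label filter applied"
    quoted = ", ".join(f'"{label}"' for label in labels)
    verb = "Exclude" if exclude else "Include"
    if len(labels) == 1:
        return f"{verb} assets labeled {quoted}"  # any/all is moot for one label
    if mode == "any":
        return f"{verb} assets matching any of {quoted}"
    return f"{verb} only assets matching all of {quoted}"


print(describe_filter(["finance", "customer"], "any"))
print(describe_filter(["finance", "customer"], "all"))
print(describe_filter(["customer"], "any", exclude=True))
```

Deriving the sentence from the same state that drives the filter keeps the explanation from drifting out of sync with what is actually applied.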

Exclusion filters for data views

Exclusion ("NOT") filters have been added in response to requests from power users like Grant. Users can exclude assets that carry any of the selected exclusion labels, or only those that carry all of them. For example, a user can filter for all assets except those related to "customer," instantly removing irrelevant content from view. Combining inclusion and exclusion filters enables highly specific searches, such as assets that mention "McDonald’s" and "ad fraud" but not "mobile data." This lets users focus on exactly the subset of data needed for the task at hand.
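Inclusion and exclusion compose naturally as set operations: an asset must pass the inclusion filter and must not be caught by the exclusion filter, each with its own any/all mode. This is a hypothetical Python sketch of that logic (the `passes` and `hits` names and the sample assets are invented), reproducing the "McDonald’s" and "ad fraud" but not "mobile data" example.

```python
from typing import Literal

Mode = Literal["any", "all"]  # hypothetical: "any" = OR, "all" = AND


def hits(labels: set[str], selected: set[str], mode: Mode) -> bool:
    """True if `labels` match `selected` under the chosen any/all mode."""
    if mode == "any":
        return bool(labels & selected)
    return selected <= labels


def passes(labels: set[str],
           include: set[str], include_mode: Mode,
           exclude: set[str], exclude_mode: Mode) -> bool:
    """Apply inclusion first, then drop anything caught by the exclusion filter."""
    if include and not hits(labels, include, include_mode):
        return False
    if exclude and hits(labels, exclude, exclude_mode):
        return False
    return True


assets = {
    "Campaign audit": {"McDonald's", "ad fraud"},
    "App telemetry review": {"McDonald's", "ad fraud", "mobile data"},
    "Menu pricing": {"McDonald's"},
    "Support tickets": {"customer"},
}

# "McDonald's" AND "ad fraud", but NOT "mobile data"
print([name for name, labels in assets.items()
       if passes(labels, {"McDonald's", "ad fraud"}, "all", {"mobile data"}, "any")])

# Everything except assets labeled "customer"
print([name for name, labels in assets.items()
       if passes(labels, set(), "any", {"customer"}, "any")])
```

The `if include` and `if exclude` guards matter: an empty set is a subset of everything, so without them an empty "all" exclusion would hide every asset.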

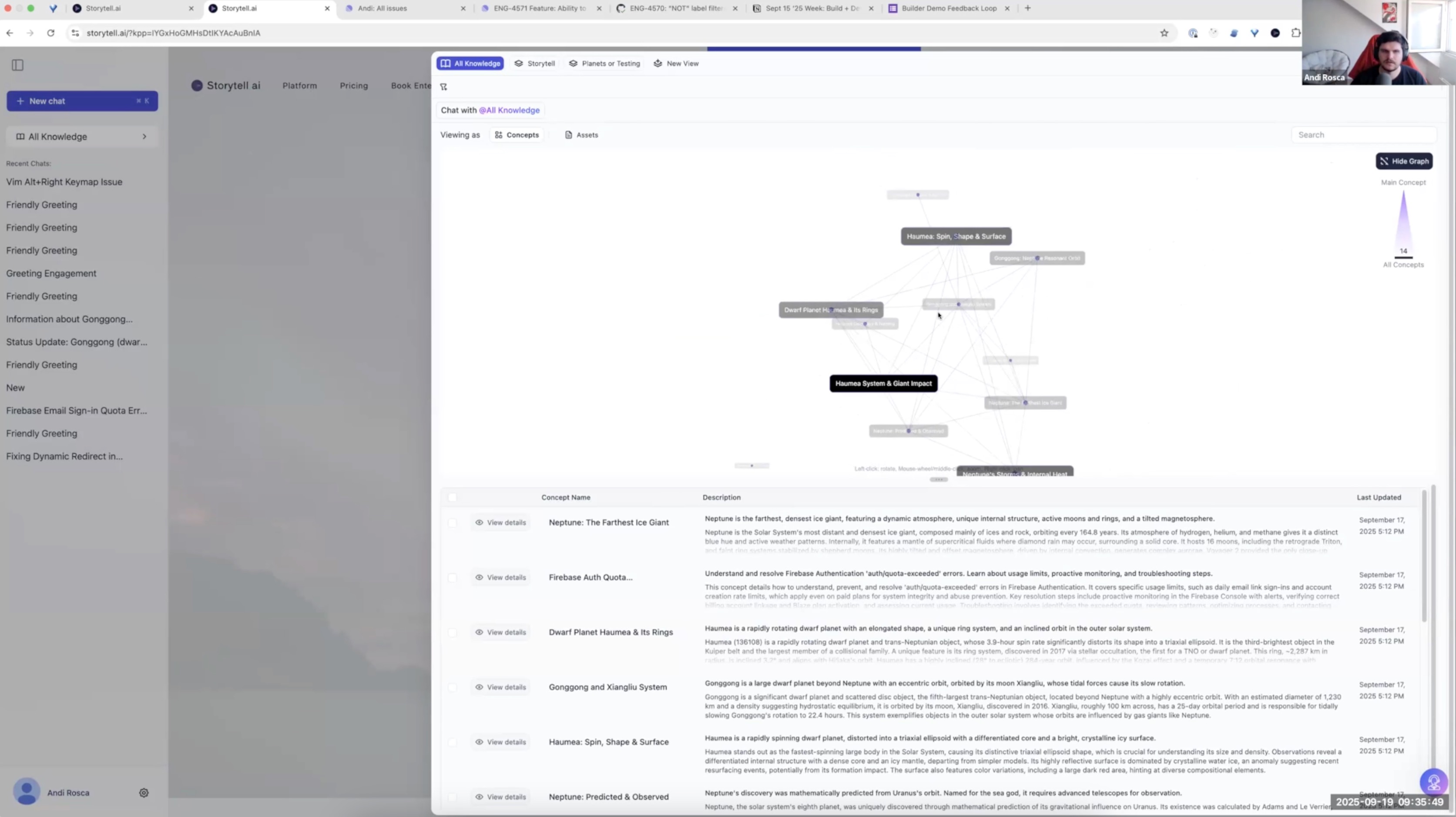

LLMs now drive chat decision making

Storytell’s agentic mode puts LLMs in charge of not only generating answers but also making decisions about when and how to conduct web searches. For example, when answering a query about recent news, Claude will now decide to initiate a web search, select relevant information, and share a step-by-step view of its reasoning. This approach brings more transparency to LLM-driven responses, giving users a clearer understanding of why outputs are generated in a particular way.
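Agentic loops like this are commonly structured as follows: the model returns either a tool request or a final answer, the application runs any requested tool, and every step is recorded so it can be shown in the chat. The sketch below assumes that structure. `stub_model` and `stub_search` are placeholders for a real LLM call and search service, not Storytell or vendor APIs, and the stub's "search if the question mentions recent" rule stands in for the model's own judgment.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Step:
    kind: str     # "thinking", "tool_call", "tool_result", or "answer"
    content: str


def stub_model(question: str, history: list[Step]) -> Step:
    """Stand-in for an LLM call. A real model makes this choice itself;
    this stub just searches once for questions that mention "recent"."""
    searched = any(step.kind == "tool_result" for step in history)
    if "recent" in question.lower() and not searched:
        return Step("tool_call", question)  # content is the search query
    source = "search results" if searched else "model knowledge"
    return Step("answer", f"Answer based on {source}")


def stub_search(query: str) -> str:
    """Stand-in for a web search tool."""
    return f"[results for {query!r}]"


def run_agent(question: str,
              model: Callable[[str, list[Step]], Step],
              search: Callable[[str], str],
              max_steps: int = 5) -> list[Step]:
    """Let the model pick the next action until it answers; keep every step visible."""
    trace = [Step("thinking", f"Deciding how to answer: {question}")]
    for _ in range(max_steps):
        step = model(question, trace)
        trace.append(step)
        if step.kind == "answer":
            break
        if step.kind == "tool_call":
            trace.append(Step("tool_result", search(step.content)))
    return trace


for step in run_agent("Any recent news about ad fraud?", stub_model, stub_search):
    print(f"{step.kind:>11}: {step.content}")
```

Because the trace is returned rather than hidden, the chat can render each decision, including the decision not to search.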

Each LLM offers a distinct conversational experience. Claude, for instance, shows detailed “thinking” steps, while Gemini follows a slightly different pattern. Both present their intermediate reasoning directly in the chat, so users can see whether a model decides to invoke additional information retrieval methods, such as live web search.

Significant performance improvements

With this architectural overhaul, Storytell chat delivers results much faster: tasks that previously took up to a minute now complete in a few seconds. The web search integration has also been refined so that LLMs know the current date, letting them pull current, accurate information for queries about recent events or time-sensitive topics. This is immediately useful for research, reporting, and decision making that depends on up-to-the-minute information.
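One common way to make a model date-aware is to include the current date in its system prompt. The post doesn't say exactly how Storytell does this, so the sketch below is an assumption; `build_system_prompt` and its wording are hypothetical.

```python
from datetime import date
from typing import Optional


def build_system_prompt(today: Optional[date] = None) -> str:
    """Prepend the current date so the model can judge what "recent" means."""
    if today is None:
        today = date.today()
    return (
        f"Today's date is {today.isoformat()}. "
        "For questions about recent or time-sensitive events, run a web search "
        "and prefer sources dated close to today over your training data."
    )


print(build_system_prompt(date(2025, 9, 19)))
```

Passing the date in explicitly, as in the usage line, also makes the prompt easy to test.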

Additionally, users can control LLM actions using plain language—for example, instructing the system to limit web searches to two results and run them in parallel. This provides advanced users with more power to tune data-gathering behaviors and optimize for speed, breadth, or specificity as needed.
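An instruction like "limit web searches to two results and run them in parallel" ultimately comes down to a result cap plus concurrent requests. This Python sketch shows that effect with `asyncio.gather`. `fake_search`, `run_searches`, and the `max_results` parameter are hypothetical, and the sleep only simulates network latency.

```python
import asyncio
import time


async def fake_search(query: str, max_results: int) -> list[str]:
    """Stand-in for a web search call; the sleep simulates network latency."""
    await asyncio.sleep(0.2)
    hits = [f"{query} result {i}" for i in range(1, 6)]
    return hits[:max_results]  # honor the "limit to N results" instruction


async def run_searches(queries: list[str], max_results: int = 2) -> dict[str, list[str]]:
    """Run all searches concurrently instead of one after another."""
    results = await asyncio.gather(*(fake_search(q, max_results) for q in queries))
    return dict(zip(queries, results))  # gather preserves input order


start = time.perf_counter()
out = asyncio.run(run_searches(["ad fraud trends", "McDonald's ad spend"]))
elapsed = time.perf_counter() - start
for query, links in out.items():
    print(query, links)
print(f"took {elapsed:.2f}s")  # roughly 0.2 s, since the two 0.2 s searches overlap
```

Because the searches overlap, total wait time tracks the slowest search rather than the sum of all of them.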

Become an Alpha or Beta Tester

Get early access to features as we release them by becoming an alpha or beta tester. Here's how to sign up: https://docs.storytell.ai/about/early-access
