How to Build AI with Integrity — Why I Started Storytell

May 28, 2025

I’ve always believed that the stories we tell about ourselves, our work, and our relationships shape the world we live in. In April 2022, I read a cover feature in The New York Times Magazine titled "A.I. Is Mastering Language. Should We Trust What It Says?" When I saw them use AI to re-animate Italo Calvino's voice, I was enthralled. The wave of generative AI was here.

Alongside my excitement, I wondered, how do we do this right? AI is only going to be more powerful and more immersive. So what does it look like to build with integrity? How can we have tech that awakens our consciousness instead of numbing it? I thought the best way to answer that question was to build an AI company.

At the time, I was running a founder community and witnessing the mountains of unstructured data around us, especially as we recorded the video and audio for every call. There were valuable moments in those calls, but unless you were an incredible note taker, they were almost impossible to extract.

My co-founder and I decided to build a tool that could bring all of that messy, vibrant information into structured, thoughtful workflows.

Welcome to Storytell.ai.

Early roots in shared purpose

My early life was shaped by communal living. I was born in a commune, surrounded by people who believed that how we live together and how we share resources and stories shapes the future. That taught me early on that building systems isn’t just about efficiency or scale. It’s about values.

So when I saw generative AI take off, I felt a deep sense of responsibility. I knew the decisions we make today will become the defaults of tomorrow.

Excited about the future; committed to doing it right

From the start, I was captivated by what generative AI could unlock, especially in creating more responsive, more intuitive digital companions. But I also knew that this future wasn’t guaranteed to be humane. The window to build it right is limited; we won’t have a reset button in five years.

What Storytell is and how it’s designed

Storytell is essentially a cursor for unstructured data. It helps teams organize knowledge, expand ideas, and communicate better using generative AI.

From day one, we focused not just on what Storytell could do, but on how it did it:

- Transparency by design: Users can see how a prompt is structured, what knowledge it draws from, and where claims come from.

- Context that stays with you: Outputs are grounded in your own materials, so the LLM reflects your institutional knowledge, not generic internet data.

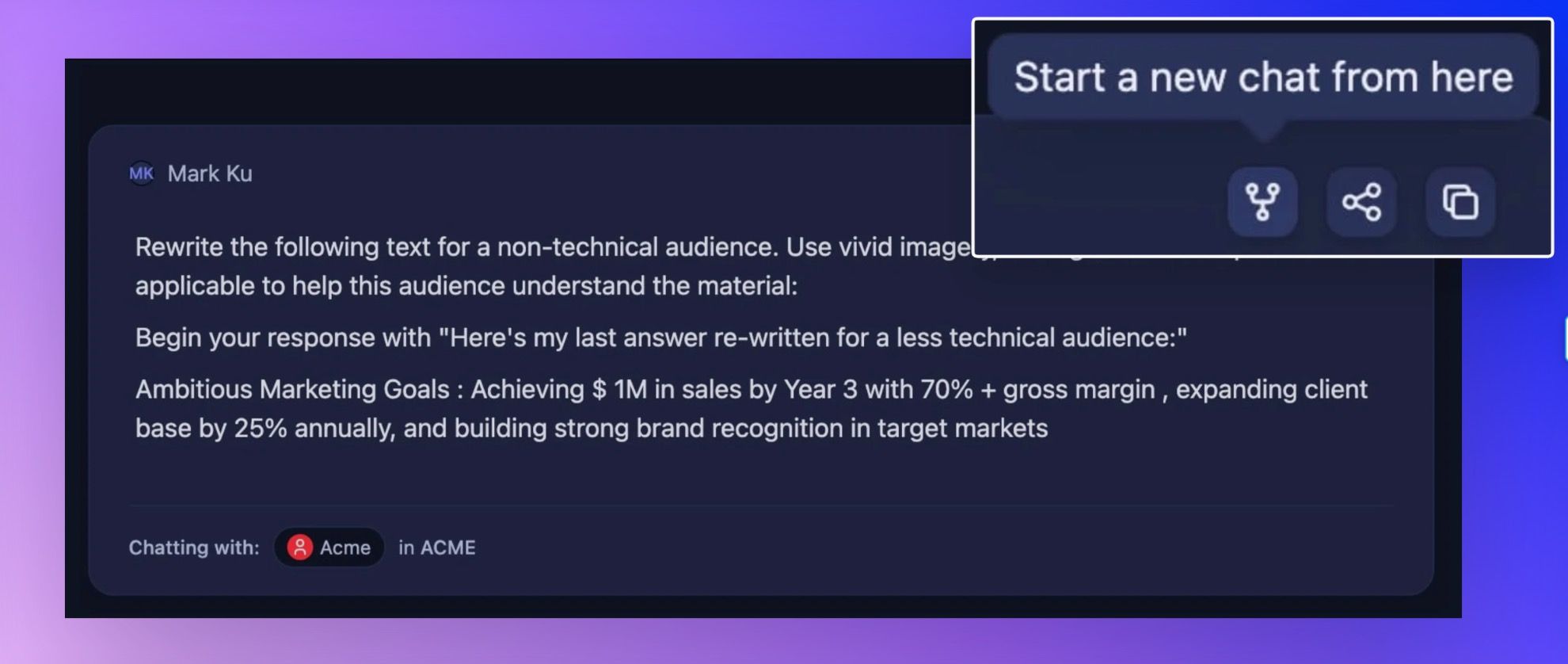

- Dynamic LLM router: Instead of locking in one LLM, we built a flexible router that picks a model based on speed, accuracy, cost, or nuance.

- @Mention feature: You can directly mention files, conversations, or notes in your prompts to give the LLM full context. This helps keep key insights and references at your fingertips, ensuring outputs reflect the most relevant and trusted information.

- Human-led workflows: Storytell is built for collaboration between humans and AI. You choose the prompt, define the context, and stay in control of how the response is shaped so the LLM becomes a thought partner, not an autopilot.

- Collaboration at the level of data: Our product de-silos teams by letting them collaborate on the same data set and in the same chat threads.

- Inline citations: You’ll see references to the sources behind each response, letting you verify and trace information with confidence.
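For readers who want a concrete picture of the router idea in the list above, here is a minimal sketch in Python. It is not Storytell's implementation: the model names, the scores, and the `route` function are all hypothetical, and the weighted-sum scoring is just one simple way to trade off speed, accuracy, cost, and nuance.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelProfile:
    """Hypothetical per-model scores in [0, 1]; higher is better."""
    name: str
    speed: float
    accuracy: float
    cost: float      # higher means cheaper to run
    nuance: float


# Made-up catalogue for illustration only.
MODELS = [
    ModelProfile("fast-small", speed=0.9, accuracy=0.6, cost=0.9, nuance=0.4),
    ModelProfile("balanced", speed=0.6, accuracy=0.8, cost=0.6, nuance=0.7),
    ModelProfile("deep-large", speed=0.3, accuracy=0.95, cost=0.2, nuance=0.95),
]

CRITERIA = {"speed", "accuracy", "cost", "nuance"}


def route(weights: dict[str, float], models: list[ModelProfile] = MODELS) -> ModelProfile:
    """Return the model with the highest weighted score for this request."""
    unknown = set(weights) - CRITERIA
    if unknown:
        raise ValueError(f"unknown criteria: {sorted(unknown)}")

    def score(model: ModelProfile) -> float:
        return sum(w * getattr(model, name) for name, w in weights.items())

    return max(models, key=score)


# A quick summary cares mostly about speed and cost.
quick = route({"speed": 1.0, "cost": 0.5})
# A careful analysis cares about accuracy and nuance.
careful = route({"accuracy": 1.0, "nuance": 1.0})
print(quick.name, careful.name)
```

The point of the sketch is the design choice, not the numbers: the caller states what matters for a given task, and the model is chosen per request rather than fixed in advance.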

Our users tell us they feel like superheroes — not because they’re doing everything alone, but because they’re finally equipped with tools that amplify their impact and create space for work-life balance.

Integrity in practice

To build AI with integrity, we’ve embedded principles into our product process:

- We publish changelogs so users can track how Storytell evolves.

- We test not just for accuracy but for usefulness and alignment with real-world context.

- We put users at the center of our building experience, treating feedback as part of the design process.

- We create room for experimentation while staying grounded in ethical guardrails.

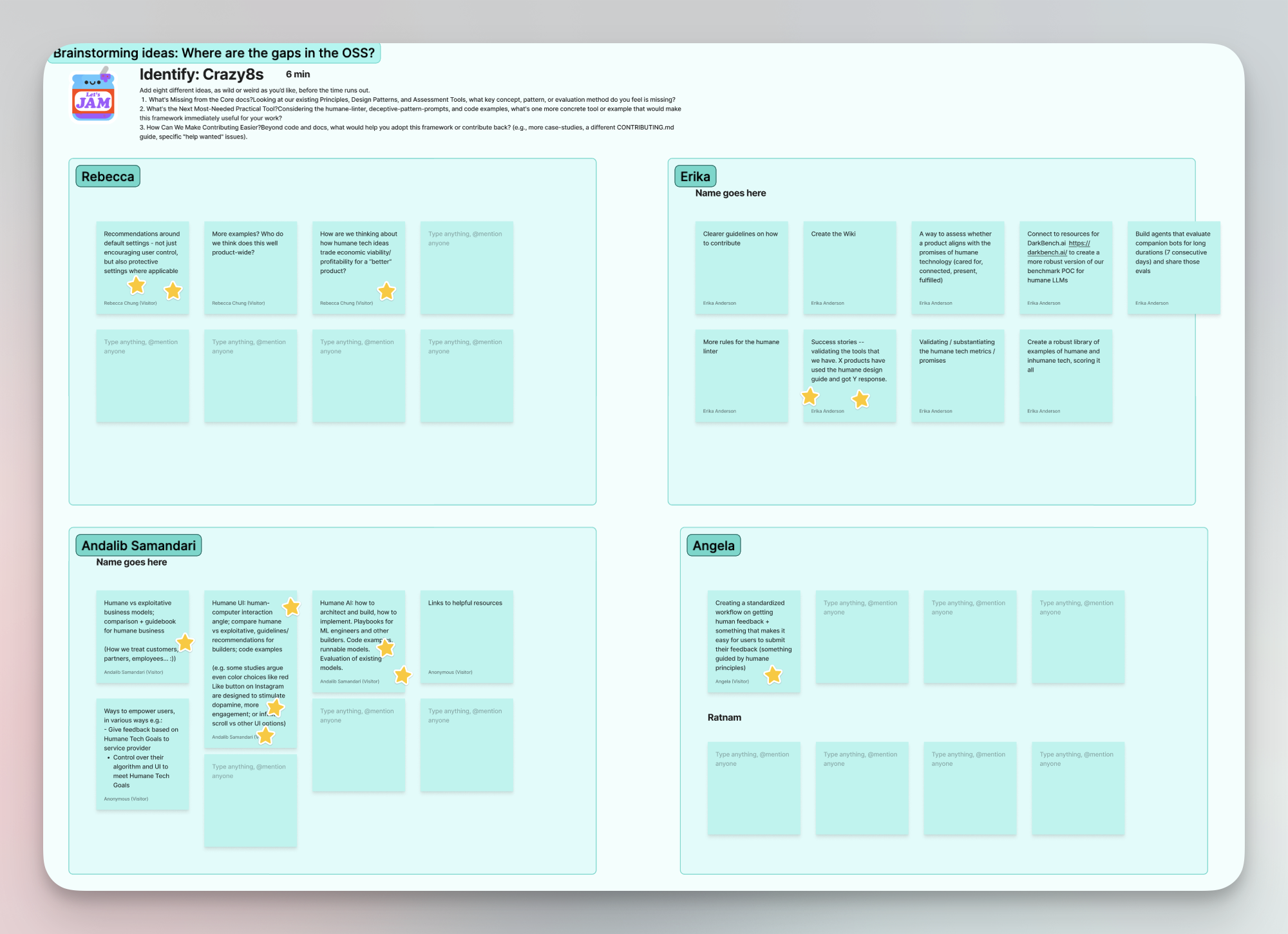

This is also why I started Building Humane Tech—to bring others into the conversation. Whether we’re prototyping better onboarding flows or co-creating new metrics for accountability, we believe this work can’t happen in silos. Integrity is a collective effort.

The future we’re shaping

We’re still learning, refining, and asking the questions that matter. The truth is we'll always be learning. I’m proud of what we’re building with Storytell: a platform that turns unstructured data into shared insight, making LLMs work in service of human understanding, with users at the center of every interaction. We’re not just shaping a tool—we’re shaping a new way to work with technology, one that values context, care, and collaboration.

For me, that’s the real promise of Storytell. It’s not just about making generative AI more accessible or powerful. It’s about building systems that honor the complexity of human thought, that make our ideas clearer and our conversations richer, and that leave space for the questions we’re still figuring out together.

To be sustainable, humane tech can’t be a set of ad-hoc measures; it has to be a scalable system of frameworks everyone can use. That’s why we’re building an open source humane tech repo to aggregate all existing frameworks and contribute our own, such as humane tech metrics and a humane linter.
