
Crafting Ethical AI: Insights from our workshop on Algorithmic Bias

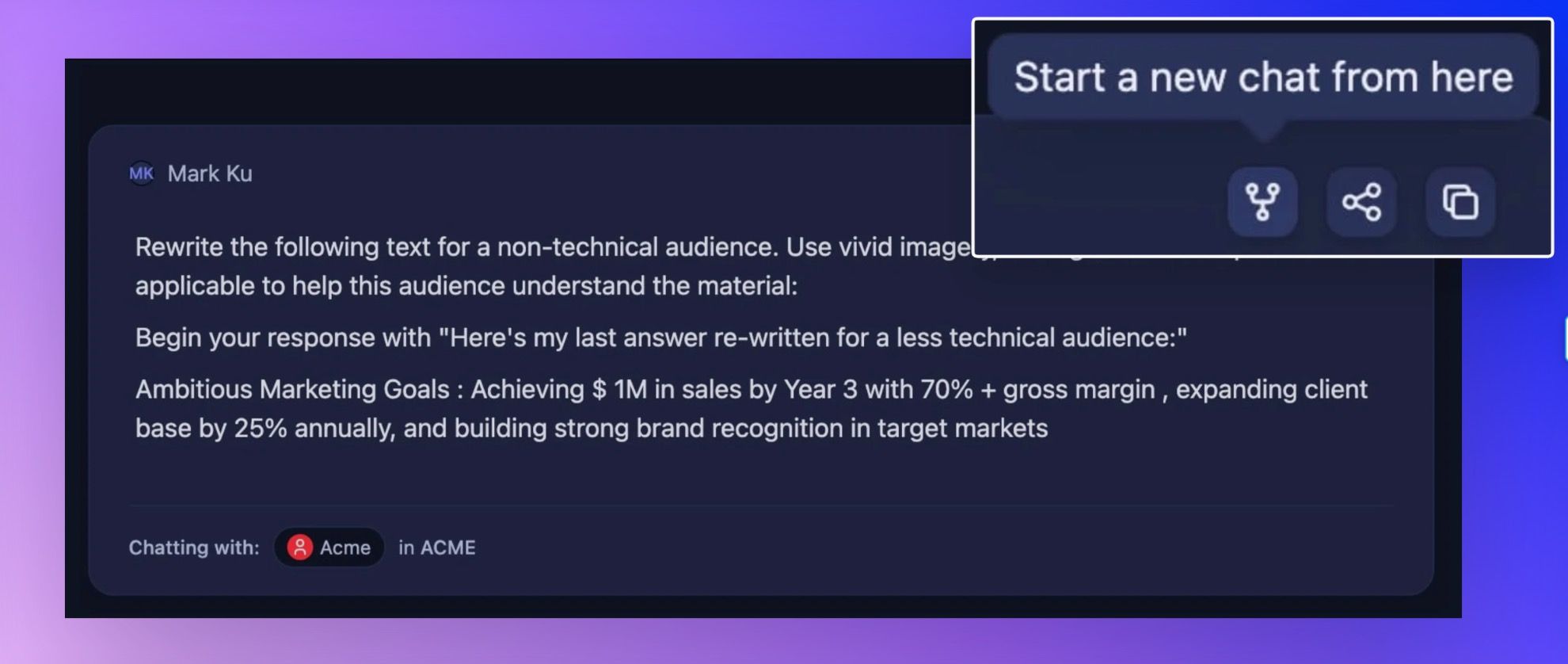

Alix Rübsaam's workshop, which has been attended by over 1,200 people, is now open to the public and attracts participants from major companies like IBM and VMware, as well as smaller startups like Storytell.ai.

September 26, 2024


Our lives are enhanced by technology, overwhelmed by it, distracted by it, and sometimes, enchanted. In response to this distraction and, in some cases, harm, a nascent movement is underway. I call it the Humane Tech movement. Here’s how I’m taking part: hosting a humane tech meetup in the Bay Area and bringing its principles into our startup as we find the capacity to do so.

I give the capacity caveat because we’re running a business, and in order to run it, we have to build it. What startups don’t have is time; we’re careful to protect our engineers’ build time, because otherwise we don’t have a product, customers, or investors. And yet, we decided it was worth it for our globally distributed crew of 12 to take part in a two-hour algorithmic bias workshop with Alix Rübsaam, a researcher in the philosophy of technology for Singularity University. Rübsaam has led 1,200 people through this workshop, from Fortune 500 executives to the Peruvian Congress. Because the workshop was open to the public, we were joined by data scientists, engineers, account execs, and product managers from large companies like IBM and VMware, as well as from smaller startups.

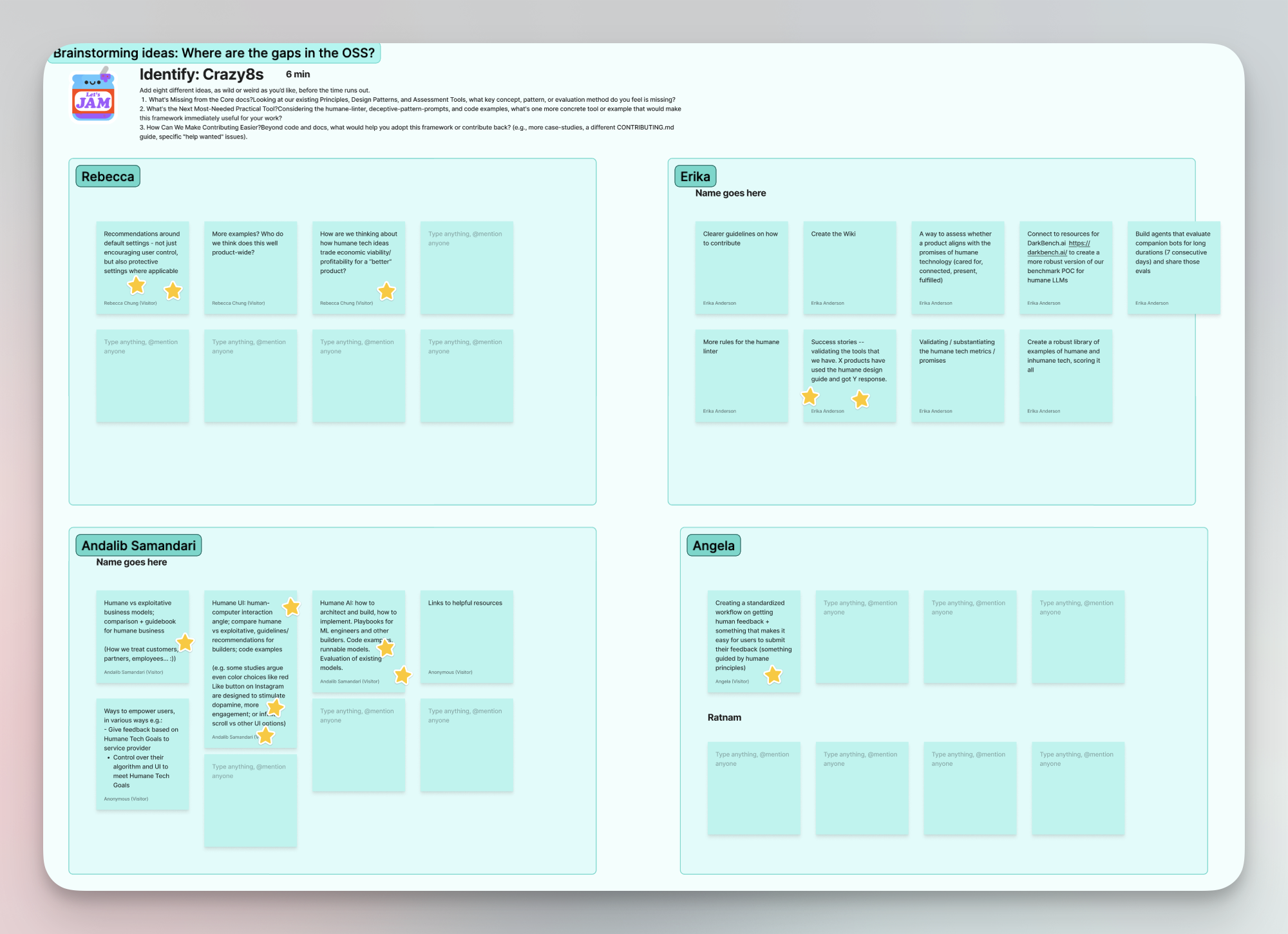

What I appreciate most about Rübsaam’s workshop is that it’s entirely experiential and interactive. You use your own data, build algorithms in small groups, and feel the weight of becoming decision-makers. So much of the algorithmic world feels off-limits to those of us who are not machine-learning engineers (and there are only about 150K of those in the world, roughly 0.002% of the global population), yet this workshop makes it entirely accessible.

My main takeaways:

- Algorithms are a reflection of the messiness of human life

- All algorithms operate according to some rules, even if we tend to separate them into machine-learning algorithms and rule-based algorithms

- It’s important to share what the algorithm can and can’t do

- There’s no such thing as a “bias-free” algorithm. In order to function properly, an algorithm needs to be biased toward something.

- The bias is the design – without it, algorithms wouldn’t be useful or functional

- Then there’s unintended bias, which should be tested for and addressed
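To make the difference between designed bias and unintended bias a bit more concrete, here is a minimal Python sketch. It is not from the workshop: `score_applicant` is a hypothetical rule-based screening function that is deliberately biased toward years of experience (the bias is the design), and `flags_unintended_bias` is a simple test that compares selection rates across groups. The applicant data is made up, and the 0.8 ratio is an assumption borrowed from the "four-fifths" rule of thumb used in US hiring guidance.

```python
from collections import defaultdict


# Hypothetical rule-based algorithm: favoring experience is the intended bias.
def score_applicant(applicant: dict) -> bool:
    """Select applicants with at least 5 years of experience (assumed threshold)."""
    return applicant["years_experience"] >= 5


def selection_rates(applicants: list[dict], group_key: str) -> dict[str, float]:
    """Return the fraction of applicants selected within each group."""
    selected: dict[str, int] = defaultdict(int)
    total: dict[str, int] = defaultdict(int)
    for applicant in applicants:
        group = applicant[group_key]
        total[group] += 1
        if score_applicant(applicant):
            selected[group] += 1
    return {group: selected[group] / total[group] for group in total}


def flags_unintended_bias(rates: dict[str, float], min_ratio: float = 0.8) -> bool:
    """True if any group's selection rate is below min_ratio of the highest rate."""
    highest = max(rates.values())
    if highest == 0:
        return False  # nobody was selected, so there is no rate to compare against
    return any(rate / highest < min_ratio for rate in rates.values())


# Made-up data in which group B happens to have less experience on average.
applicants = [
    {"group": "A", "years_experience": 6},
    {"group": "A", "years_experience": 8},
    {"group": "A", "years_experience": 3},
    {"group": "A", "years_experience": 7},
    {"group": "B", "years_experience": 4},
    {"group": "B", "years_experience": 6},
    {"group": "B", "years_experience": 2},
    {"group": "B", "years_experience": 3},
]

rates = selection_rates(applicants, "group")
print(rates)                         # {'A': 0.75, 'B': 0.25}
print(flags_unintended_bias(rates))  # True
```

The experience rule works exactly as designed; the check only signals that it may be carrying an unintended bias against group B, which is the cue to ask why before deciding whether to change the rule.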

As you’re building algorithms, ask these questions:

- “How might the data I’m using influence the outcome or the results?

- What assumptions do I have about the questions I’m asking?

- How does my background or the context within which I’m working influence the kind of solution I come up with?

- Who would feel included in the data that I used? Who might feel excluded and why?”
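One small, practical way to start on the last question is to compare how often each group appears in your data with how often you expect it to appear among the people you serve. The Python sketch below assumes a hypothetical `representation_gaps` function, made-up region labels and expected shares, and an arbitrary 0.05 tolerance; choosing the reference population is itself one of the assumptions the questions above ask you to examine.

```python
from collections import Counter


def representation_gaps(
    records: list[dict],
    field: str,
    expected_shares: dict[str, float],
    tolerance: float = 0.05,
) -> dict[str, float]:
    """Return groups whose share of the records differs from the expected share
    by more than `tolerance` (positive = over-represented, negative = under-represented)."""
    if not records:
        raise ValueError("records must not be empty")
    counts = Counter(record[field] for record in records)
    n = len(records)
    gaps = {}
    for group, expected in expected_shares.items():
        actual = counts.get(group, 0) / n  # groups absent from the data get a share of 0
        if abs(actual - expected) > tolerance:
            gaps[group] = round(actual - expected, 3)
    return gaps


# Made-up dataset of 10 records and made-up expected shares that sum to 1.
records = (
    [{"region": "North America"}] * 7
    + [{"region": "Europe"}] * 2
    + [{"region": "South America"}] * 1
)
expected = {"North America": 0.5, "Europe": 0.2, "South America": 0.12, "Africa": 0.18}

print(representation_gaps(records, "region", expected))
# {'North America': 0.2, 'Africa': -0.18}
```

A group that never appears in the data, like "Africa" here, only shows up in the result because it was listed in the expected shares, so the people most likely to feel excluded are the ones you have to think to look for.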

On these points, Rübsaam recommended a leader in the field: this project, which helps data scientists maintain the integrity of a dataset. She also pointed out that in order to navigate these questions, you need psychological safety. That’s important to us, too. One of our values at Storytell is Clean Communication, a framework I created to cultivate deeper levels of understanding in the workplace.

We’ll be wrestling with Rübsaam’s questions as we work on our own Large Language Model. For all of you who are building algorithms, how are you addressing these questions? What decisions are you making? Are you clarifying what users can do with your algorithm and what it simply won’t work for? Take a look at Simply News to see how they describe their algorithms.

One reason I co-founded Storytell was to explore what it means to build AI with integrity. This week’s session was a powerful step in that journey. We are committed to confronting these questions head-on and ensuring that the technologies we create contribute to a humane future. If you’re in the Bay Area and interested in these conversations, I encourage you to join our monthly Building Humane Technology meetup.

Together, we can build AI that not only advances technology but also upholds ethical principles and serves humanity as a whole. See you at the next workshop!

Resources:

- Automating Inequality by Virginia Eubanks

- The Alignment Problem by Brian Christian

- Unmasking AI by Joy Buolamwini
