
Removing the Grenade: What AI can learn from the DevOps Movement

Employees and IT Departments are facing off over AI in the enterprise

May 15, 2024

Fifteen years ago, we faced a serious misalignment between developers and IT departments—a battle between creators and the organization’s guardians of safety and security. This “fatal dysfunction” inspired me to build my last startup, which commercialized an open-source CI/CD software delivery platform that helped some of the world's largest companies ship code safely to production, with velocity.

An entire DevOps movement sprang up around this, and we were proud to bring it to Global 2000 and Fortune 500 companies, helping developers, operations, and IT realize they needed to move from opposing sides of the table to the same side. Our customers overcame the classic tension: developers, who typically want to ship code as often and quickly as possible, are often at odds with operations and IT teams, who typically would prefer that code be shipped as safely and infrequently as possible to maximize the stability of the company's infrastructure. If you make the right investments in culture and in infrastructure, you can sidestep the false choice of thinking velocity comes at the expense of safety.

AI has caused employees and IT departments to face off across the table, just like developers did 15 years ago before DevOps, when "IT operations and software development communities raised concerns [around] what they felt was a fatal level of dysfunction in the industry." - Atlassian

Why am I talking about this now? Because I’m seeing the same thing at play with AI. AI is this magical assist that allows any knowledge worker to suddenly be a builder and a creator. It’s empowering knowledge workers with a new skill set and making them look a lot more like developers did in the past, which can make them seem dangerous to the organization. The "code" grenade that developers were throwing over the wall to IT teams before DevOps has transformed into the "AI" grenade today.

AI has not yet gone through the maturing journey that ultimately created DevOps, but it needs to in order for humans to flourish using AI. Otherwise, the enterprise will be at odds with its knowledge workers.

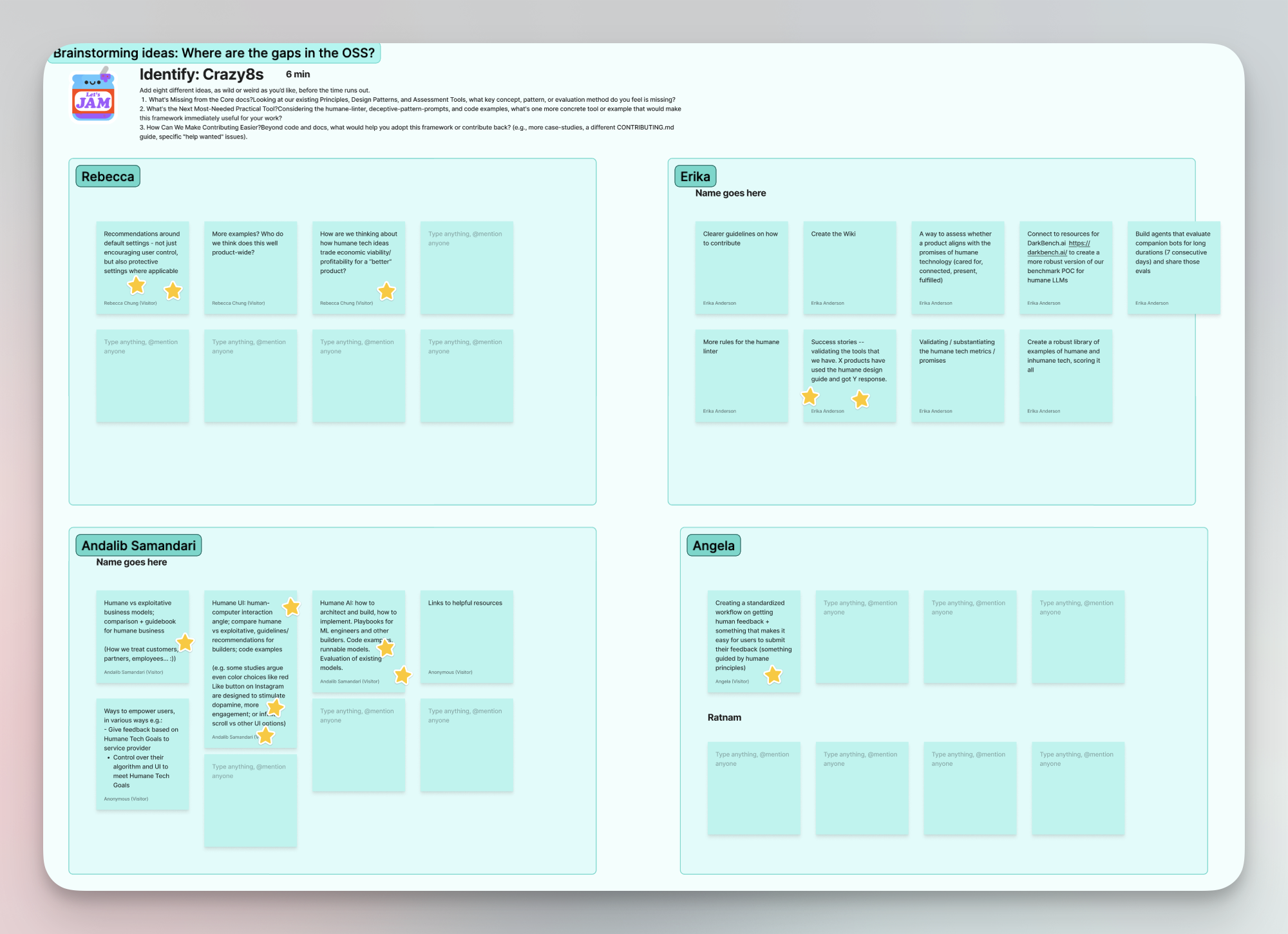

DevOps was both a cultural shift between two opposing departments and an investment in the infrastructure that enabled it. My co-founder and our CCO, Erika Anderson, recently gave a talk to several hundred executives at Singularity University's Future of AI program about how we need to find the intersection of humanity and technology to make this possible. She’ll follow up with a post on the cultural shift needed to bring AI into your organization. For the rest of this post, I'll focus on the investments in AI infrastructure that will enable AI to follow in DevOps' footsteps.

Throwing the AI grenade: The tension between employees and employers

To illustrate what I mean, I’ll share with you how we’re seeing our customers use AI:

One of our users is a VP at a telco company who uses Storytell not only to be more effective in his role at work, but to play Dungeons and Dragons with his friends. Another power user is a VP of client analytics, using Storytell to put proposals together for Fortune 500 customers at work, while also using Storytell in his role as a member of the city planning board, where he uses SmartChat™ to put together and manage grant proposals for his municipality. I see this as an extension of the consumerization of IT trend that started with employees bringing their personal iPhones to work, expecting enterprise software to "just work" across their personal and professional lives.

We are seeing these same employees bring AI into their workplace regardless of the official policies. AI is just too helpful in making us more effective knowledge workers -- to understand better and faster, to see hidden patterns and insights, to communicate more effectively with colleagues, to de-silo work across teams and departments. Companies that are banning AI at work are staring down a tsunami wave of demand from their employee base that will be washing over them in the coming years.

All of this is, understandably, terrifying to the CTOs, CIOs, CISOs and related leaders of organizations whose job it is to keep their companies safe and their data secure. The initial reaction we see is usually one of "command and control" mandates across the organization such that employees either can't use AI at all or can only use it in carefully sanctioned, limited ways. It's like telling employees that they can only check their email on the company-provided phone: Yes, it's possible with enough discipline, MDM infrastructure, and training, but it also comes at a very large cost to the employees who lose the ability to get their work done in the most effective ways possible.

Making AI accessible to employees and safe for companies

The tug between accessibility and safety isn't new -- Steve Yegge famously called it out in his 2011 rant about Google vs. Amazon.

Storytell.ai's vision is to Make Life Meaningful by Making Work Meaningful. Let's dig into how we're achieving that, and what it means for this dysfunction between employees and their companies.

We do this by making AI accessible to the employee base. Knowledge workers don't want to be thinking about things like:

- Which LLM should I be using to get this job done? Do I use gpt-4-1106-preview? Or Claude 3 Sonnet? Or LLaMA? Or Camel? Or one of the other 333 LLMs becoming available?

- What is the exact right question to be asking in the exact right way to "reach into" the LLM most effectively to get the best answer back?

Knowledge workers want AI to "just work" for them: to be accessible in their daily lives, in their workflows, as their partner. They typically don't care what's under the hood.

But we also have to make AI safe for organizations. Companies want to be able to control things like:

- Which LLM is being used for which task? Can we route the sensitive tasks from the finance department to an on-prem open-source LLM so our data doesn't leave the building?

- How is my data being secured, permissioned, and governed? How do we ensure that the right employees have access to the right information, and they keep it secure while using AI? How do we make sure that our sensitive PII, financial and proprietary data doesn't get into foundational training models?

- How do we control and manage costs while ensuring that the right departments are being billed?

There are many more bullet points for each of the lists above, but you get the idea -- the employees and the IT departments are facing off across the table from each other, and the dysfunction due to this misalignment of interests is just beginning.
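To make the company-side questions concrete, here is a minimal sketch of what "route sensitive tasks to an on-prem model" and "bill the right department" could look like in code. This is an illustration only, not Storytell's implementation: the model names, the per-token prices, the `route` function, and the `CostLedger` class are all hypothetical, and a real policy would cover far more cases.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Model:
    """A hypothetical LLM endpoint; names and prices are made up."""
    name: str
    on_prem: bool
    cost_per_1k_tokens: float


# Assumed endpoints, for illustration only.
ON_PREM = Model("on-prem-open-source-llm", on_prem=True, cost_per_1k_tokens=0.0)
HOSTED = Model("hosted-frontier-llm", on_prem=False, cost_per_1k_tokens=0.01)

# Assumed policy: anything from these departments stays in the building.
SENSITIVE_DEPARTMENTS = {"finance"}


def route(department: str, contains_pii: bool) -> Model:
    """Pick a model so that sensitive work never leaves the company."""
    if department in SENSITIVE_DEPARTMENTS or contains_pii:
        return ON_PREM
    return HOSTED


class CostLedger:
    """Accumulates model spend per department for chargeback."""

    def __init__(self) -> None:
        self._totals: dict[str, float] = defaultdict(float)

    def record(self, department: str, model: Model, tokens: int) -> None:
        self._totals[department] += model.cost_per_1k_tokens * tokens / 1000

    def total(self, department: str) -> float:
        return self._totals[department]


ledger = CostLedger()
for dept, pii, tokens in [("finance", False, 5000), ("marketing", False, 2000)]:
    chosen = route(dept, pii)
    ledger.record(dept, chosen, tokens)
```

The employee never sees any of this. They ask their question, and the policy decides which model answers and which budget pays for it, which is the whole point of getting both sides onto the same side of the table.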

Storytell is building the enterprise AI platform making AI accessible and safe.

When employees can do higher-value work and have AI assisting them, they literally feel like superheroes. Here's a video of Cheryl Solis, a technical writer who's found work-life balance for the first time in her career thanks to Storytell:

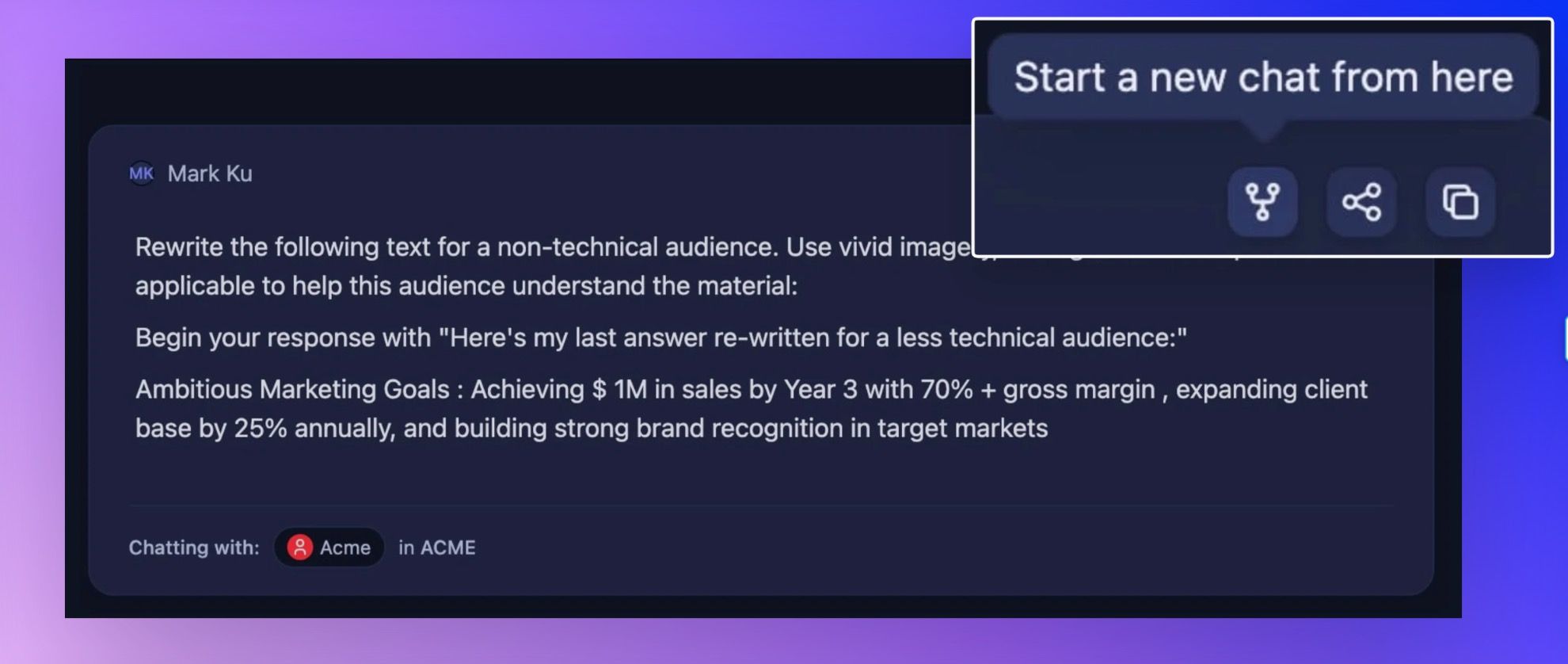

Storytell's focus on leveraging the power of the fastest-moving LLMs, like our integration of GPT-4o this week, combined with SmartChat™'s knowledge tagging system that allows employees to share knowledge across the company, means knowledge workers like Cheryl, Stephen and Grant can focus on doing more meaningful work inside their organizations.

Likewise, our focus on safety for enterprises is achieved by integrating a Data Plane, Management Plane, and Model Plane, with an LLM Router, LLM Farm, Persona Profiles and more into our infrastructure. This gives companies granular control over the way their employees use AI within their enterprises.

Additionally, we're tying it all together via not just human interfaces for employees, but also an SDK and API that let an enterprise extend Storytell's platform across its systems programmatically.

If you're interested in understanding how Storytell is making AI accessible to employees and safe for the enterprise, you can contact us here or just email Hello@Storytell.ai. You can also try using SmartChat™ yourself right here. We're looking forward to helping get the IT department on the same side of the table as the workforce so we can all make life meaningful by making work meaningful, collaboratively.

DROdio, CEO @ Storytell

PS: If you're an AI-curious executive, I recently wrote a personal post on how I use AI myself as the CEO of Storytell.

A big thank-you to Singularity University's Future of AI program last week -- that's where I realized the parallel between DevOps and the state of AI today.
