Regulation as a Launchpad: My Takeaways from TechCrunch Sessions: AI

June 6, 2025

Yesterday, I spent the day at TechCrunch Sessions: AI in Berkeley, where the conversations spanned everything from the latest breakthroughs in generative AI to the cultural forces shaping our industry. But one session stood out for me: The AI Policy Playbook: What Global Startups Need to Know.

This breakout session brought together voices from the UK Department for Business and Trade, the European Union, and the Global Innovation Forum to tackle a challenge that often gets overlooked in the excitement of new technology: how to navigate policy, regulation, and global expansion in an AI-driven world.

Gerard de Graaf captured the stakes with a line that drew both laughter and reflection: “The average food truck in San Francisco is more heavily regulated than OpenAI.” It was a stark reminder of the gap between our enthusiasm for innovation and the structures needed to make sure it’s safe and fair.

What I appreciated most was the session’s shift in framing. Regulation isn’t about slowing down progress; it’s about making sure the impact of technology aligns with human needs. As Gerard put it, “We don’t regulate technology. We regulate the use of technology, especially where it affects important aspects of someone’s life.” From that perspective, policy becomes a launchpad for building trust and a competitive edge, not just a checklist to get through.

Of course, the complexity is real. The panel touched on the nearly 2,000 AI-related bills in the U.S., as well as Europe’s significant investments in supercomputing and data spaces to create a more ethical, equitable playing field. It can feel overwhelming. But as a founder and builder, I see opportunity. When we start with privacy and fairness, regulation isn’t a burden but a foundation of trust and a shared starting point for innovation.

Another highlight was the conversation about the future of generative AI agents. Today, about 10% of enterprises use them, and in just three years, that number is expected to soar to 82%. It’s a powerful signal that governance and policy need to move with the same urgency as the technology itself.

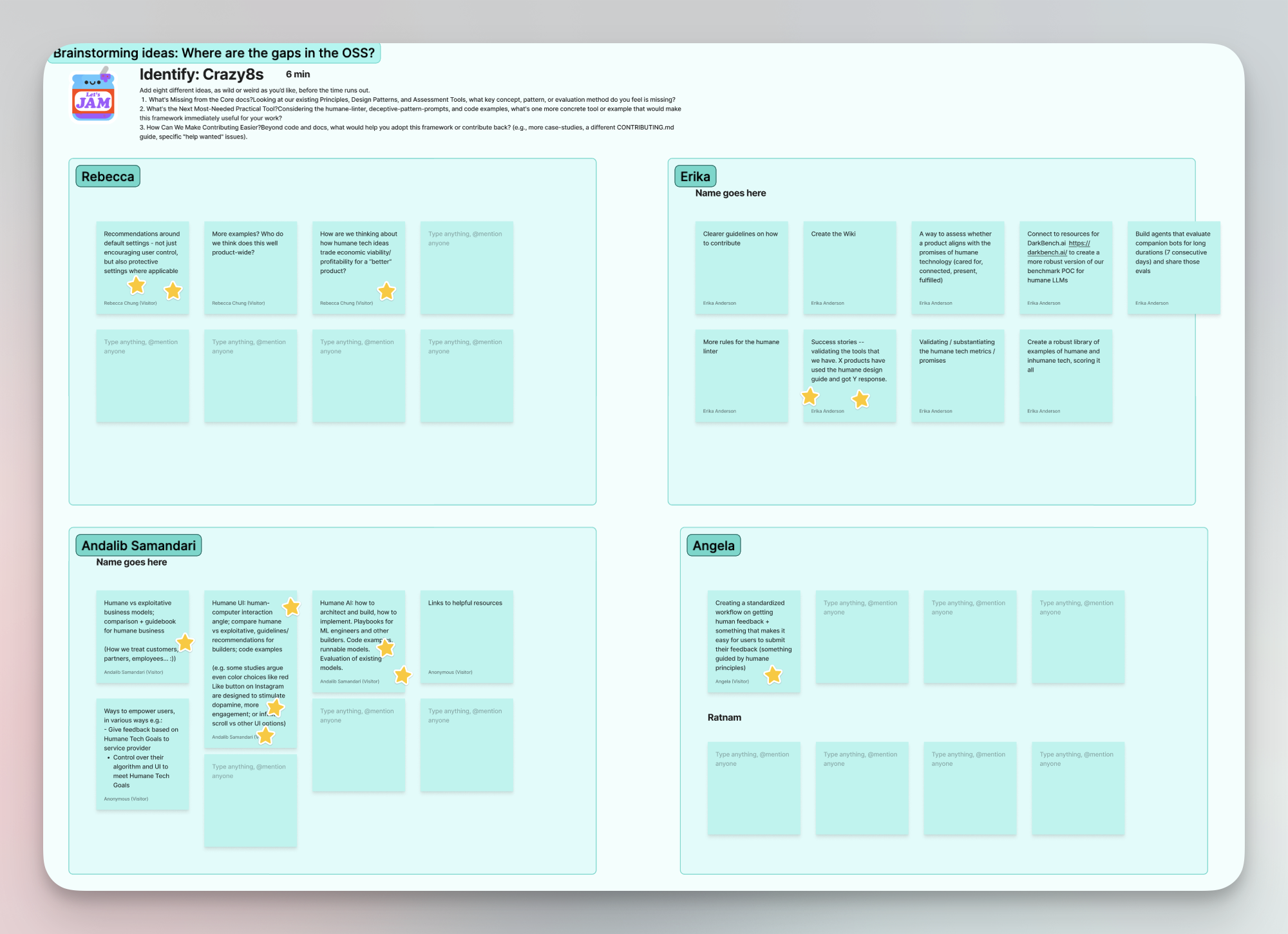

This session connected directly to the work we’re doing at Storytell and through our humane tech initiatives. We’ve integrated our humane tech metrics as a way to build with intention, using clear criteria like transparency, user dignity, and alignment with real human needs to guide how we create and deploy our products. These metrics help us see regulation not as a constraint but as a design tool. They encourage us to ask: Are we using data responsibly? Are we enabling people to understand and control how AI affects their lives? Are we building systems that strengthen, rather than erode, human trust? Seeing these themes reflected in global policy conversations reminded me that our metrics and frameworks aren’t just for us. They’re part of a broader movement to ensure technology stays accountable to the people it serves.

As I left the session, I felt more convinced than ever that policy and design belong at the same table. If we’re serious about building AI for the public good, we have to treat regulation and human impact as central to how we build, not as afterthoughts. The food trucks of the world might seem small, but they hold a big lesson: if we want to make AI work for everyone, it has to be as safe and trusted as the food we eat.
