
Building Technology That Puts Humans First: Aligning Innovation with Humane Values

By

Erika Anderson

December 18, 2024

What does it mean to design technology that serves humanity? Start by embedding ethical principles into every stage of development.

Erika Anderson, our co-founder and Chief Customer Officer, discussed the nascent movement of humane technology, the importance of storytelling in the enterprise, and how AI can support well-being on Tech Can't Save Us, hosted by Maya Dharampal-Hornby and Savena Surana.

Listen to the full podcast episode below or on Apple Podcasts.

Prioritizing Humane Design

Erika emphasized the importance of designing technology that aligns with human nature.

“We’ve evolved to be vigilant—don’t get eaten by the tiger—but tech can exploit that,” she explained, referencing the ways notifications and endless engagement models can disrupt focus. For Erika, humane tech focuses on creating tools that support well-being, meaningful connection, and intentional engagement.

At Storytell, this principle is embedded in how we build tools—ensuring technology supports rather than distracts users.

Storytelling as a Data-Driven Art

As Erika noted, businesses are content creators by default, but “much of that content is qualitative”—a vast sea of unstructured insights that often go overlooked.

She described “data-driven storytelling” as a transformative approach to harness this information. By shaping conversations and meetings into cohesive, actionable narratives, businesses can surface hidden patterns, like trends in customer feedback, streamline cross-department collaboration on shared projects, and prioritize key initiatives with greater clarity.

“Communication isn’t just about delivering information,” Erika explained. “It’s about framing the story—what’s happening, why it matters, and how it moves us forward.”

Tackling the Risks of Generative AI

While Erika sees AI as full of promise, she’s also vocal about its risks. On the podcast, she explored the challenges posed by companion bots, which are designed to mimic emotional relationships. She referenced the tragic case of Sewell Setzer III, a 14-year-old who formed an emotional attachment to an AI chatbot before taking his own life.

“Unlike social media, these bots never stop. They interact in the dark, often without oversight,” she explained, pointing to cases where bots have led vulnerable individuals down dangerous paths. Erika emphasized the need for strong guardrails and proactive design to protect users, particularly minors. Beyond emotional vulnerability, she highlighted AI's broader unintended consequences and the importance of anticipating and addressing these harms before they occur.

Erika also discussed large language models (LLMs) and highlighted a critical principle: Storytell does not train on user data. Unlike many companies that use user interactions to improve their models, Storytell takes a privacy-first approach, ensuring that personal information remains protected. This commitment reflects Storytell's belief that AI can be both powerful and ethical, without the common trade-off between innovation and user trust.

How to Build with Intention

Erika underscored that building humane technology means considering both its intended use and unintended consequences.

Responsible tech requires:

- Intentional design that aligns with human values and well-being.

- Ethical oversight to mitigate risks, particularly for vulnerable users.

- Proactive solutions to anticipate harms before they occur.

Erika highlighted that technology should empower users to thrive, not merely engage, by prioritizing focus, connection, and purpose. Technologists must consider not only what can be built but also whether it should be built. The choices made today, she stressed, will shape the world of tomorrow.

The Future of Humane Technology

Erika’s work builds on frameworks from the Center for Humane Technology and emphasizes systemic change. She draws on Donella Meadows' concept of 'leverage points' as a tool for rethinking business models and governance, a process that overlaps with building responsible and intentional technology. By addressing both societal impacts and ethical considerations, Erika highlights the need to design systems that prioritize human values and long-term well-being.

She urged technologists to take initiative: “Regulation will come. Why wait? Let’s design tech that we’re proud of today.”

A Story Worth Sharing

Erika’s conversation on Tech Can't Save Us reminds us that technology’s role is not to replace humanity but to amplify its best qualities. Her reflections highlight the importance of building tools that prioritize intention, empathy, and positive outcomes—principles that resonate with our shared responsibility as technologists.

For deeper reflections on building technology with intention, check out Erika’s Substack for insights into how you can make your tech more humane.

Podcast Transcript

Maya Dharampal-Hornby [00:04] Hello everybody, and welcome to a new episode of Tech Can’t Save Us. As always, I'm your co-host, Maya Dharampal-Hornby, and today I'm joined by...

Savena Surana [00:13] Savena Surana. Hello! Nice to be back.

Maya Dharampal-Hornby [00:15] Today we will be speaking about building humane technology with Erika Anderson, Co-founder and CCO at Storytell.ai. Welcome, Erika.

Erika Anderson [00:25] Thanks so much. I'm really happy to be here.

Maya Dharampal-Hornby [00:27] By way of introduction, there are many organizations working to help businesses integrate AI, but few hope to use it to transform the workplace for the better. Storytell.ai is one of those few. By helping businesses leverage AI tools, it makes work more efficient, more effective, and, most importantly, more meaningful. It hopes to build a community of users who will build the future of AI with humanity in mind. Why? Because the future of AI designed and deployed with humanity in mind is one which combats algorithmic bias, digital forgery, and misinformation.

Savena Surana [01:01] So central to Storytell.ai is clean communication, a framework that Erika created to help people be more connected in daily interactions. Storytell.ai uses this internally and shares it with their users to optimize feedback mechanisms and shape company cultures. Informing clean communication is Erika's varied experience, which I'm so excited to get into. From an ecologist in Alaska, a reporter at the UN in Geneva, a literary editor in New York, a team dynamics facilitator in the US and Europe, to now a Co-founder and CCO of Storytell.ai. Welcome to the pod, Erika.

Erika Anderson [01:40] Thank you. Thanks so much. I'm really impressed with you bringing the breadth of my nine-plus professional lives.

Maya Dharampal-Hornby [01:49] Amazing. So before we get to those, let's talk about your current professional life. What made you realize that something like Storytell.ai needed to exist?

Erika Anderson [01:59] Well, as y'all mentioned, I have this background as a writer, and so one thing that I've done is I've helped people tell their stories. And I think, you know, a decade back there was the essay about “software eating the world,” and every company becoming a technology company. That meant everyone needed websites and applications, and it also meant you become a content business, even when you're an early-stage startup and the resources simply aren't there. So I was helping a lot of tech founders tell their stories, and yet, despite the significant amount of money they put on the line, just making time for that — and I understand it now as a Co-founder myself — it’s almost impossible. As the rise of generative AI was happening — this latest wave in spring of '22 — I thought, "Wait a minute, we've got these distributed workforces where it’s just become normal that we’re recording our calls. How can we take what we're already doing and get that source of truth?" As opposed to having marketing tell a lesser version of the story, we could take that and share people's stories. It’s like there’s thought leadership, but there’s also the necessity to tell the world what you’re doing. Otherwise, you almost don’t exist. That was the initial impetus to explore how we could help people tell their stories.

Savena Surana [03:26] I would love to hear, given your experience and also this organization, what makes a good story for you?

Erika Anderson [03:33] I love that question. There’s an aspect of a “source of truth.” Part of what our tool does as a collaborative intelligence platform is de-silo organizations. Like, the three of us could all be operating with completely different information. There’s something around a good story where, in a business setting, it’s not fictional. It’s coming from some data source I’m bringing to you. Maybe you’re aware of it, maybe not, but there’s something that’s unifying in it. A really good story is evocative. It allows you to feel something. The story is shaped by who’s telling it but also who it’s for. So, what I say to you might be very different than what I say when onboarding folks and doing AI literacy education. Stories should make you feel something and bring insight, like a single takeaway. Something shifts for you when you receive the story. It’s part of my responsibility as a storyteller to set a scene where you’re receptive to what I’m sharing. And how can I, yeah, communicate in the way that you can hear it.

Maya Dharampal-Hornby [05:12] And do you find that people tend to be receptive to the framework of storytelling, as opposed to sort of like narrative driving?

Erika Anderson [05:22] You know, it's interesting, because I think there's so much education happening at once: stepping out of the industrial-age idea of productivity and how we're treating ourselves and each other, and recognizing the value of qualitative data. I think we love quantitative, so how do we give qualitative the value it deserves? Because actually, like, 80% of data in a company is unstructured. It is qualitative. You don't have ways to query it. So what's been really interesting is, on the one hand, it can be, oh, storytelling in business… “What are you talking about? Fairy tales? Like, what is that?” And at the same time, a lot of the inbound interest we've had that has led to contracts comes from people searching for storytelling with data, and they find us. So for some, it's a newer idea. Others already know; they're feeling that pain of trying to talk to their CEO or to execs, who are like, “You're giving me a lot of information. Where is the story?” Communication is not just information delivery, and I see that we just have to relearn that every day. It's not just “here's some numbers, Maya, deal with it,” it's “Hey, here are the numbers. Let me tell you the story of what's happening here and what we need to do to address it.”

Savena Surana [07:01] I really love that. It would be great to hear: you have these inbound people contacting you and saying, “I'd love to work with you.” What does working with you look like from their point of view, from a business point of view? What sort of processes do you go through?

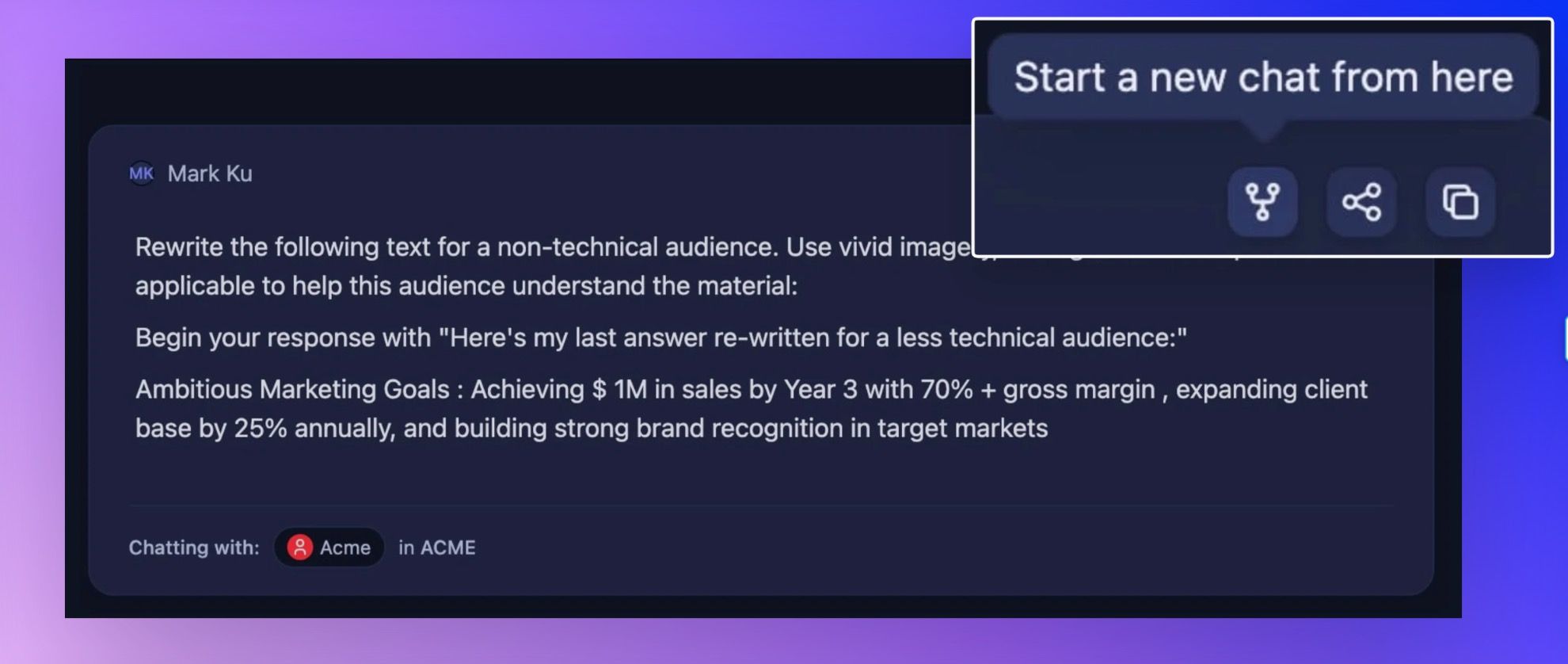

Erika Anderson [07:17] I mean, right now, I think we're at a stage where we've categorized people's relationship with AI into four areas: avoidant, cautious, curious, and enthusiastic. And so we're really interested in the curious and enthusiastic folks. And still, there's often a lot of learning. I think we're all learning how to interact with generative AI in a way that's really supportive of what we want to do. And it's iterative, and that's so different from how we're used to software working. It's like, “Wait, it didn't give me the right answer on the first try. This doesn't work.” It's very much like, “Is this thing on? What's happening here?” And yet, you know, millions of people are getting value from it already. So part of what I do is onboarding and education for customers: “Okay, what am I even working with right now? What does it mean to be interacting with a large language model?” And then showing them how they can get value in their workflow already. So often, part of the onboarding is really understanding their business processes, because there are so many places where our tool can come in and support. I can suggest some things, but the better I know, like, “Okay, how are you two working together? What are the deliverables? How do you get there? What does great look like for you?”, the better I understand how Storytell can support. So there is a lot of high-touch interaction with our customers. But then, you know, once they know our platform (and we've just launched our beta and are launching new features in just the next month), they're kind of off to the races, maybe coming back to us with ideas, or if they're ever getting stuck.
So sometimes I'm jumping on calls to help them figure out their strategy based on a data dump they got an hour before that they need to figure out on a Friday afternoon (I was just doing that last Friday), and, I don't know, it feels great to get to that point at the end of, like, oh my god, we just saved your entire weekend. And hopefully it really helps your company at the same time. So there are outcomes on a personal level and on a business level.

Savena Surana [09:51] I find that categorization of people's views and relationships to AI really interesting as well. And so I just want to push on that a bit, because I imagine when you're working with a team, it's not just your main person, your point of contact; there's a whole breadth of different emotions and feelings across the team. So have there been any points where you've been met with, say, 90% enthusiasm and 10% reluctance or avoidance, and how do you balance and manage that?

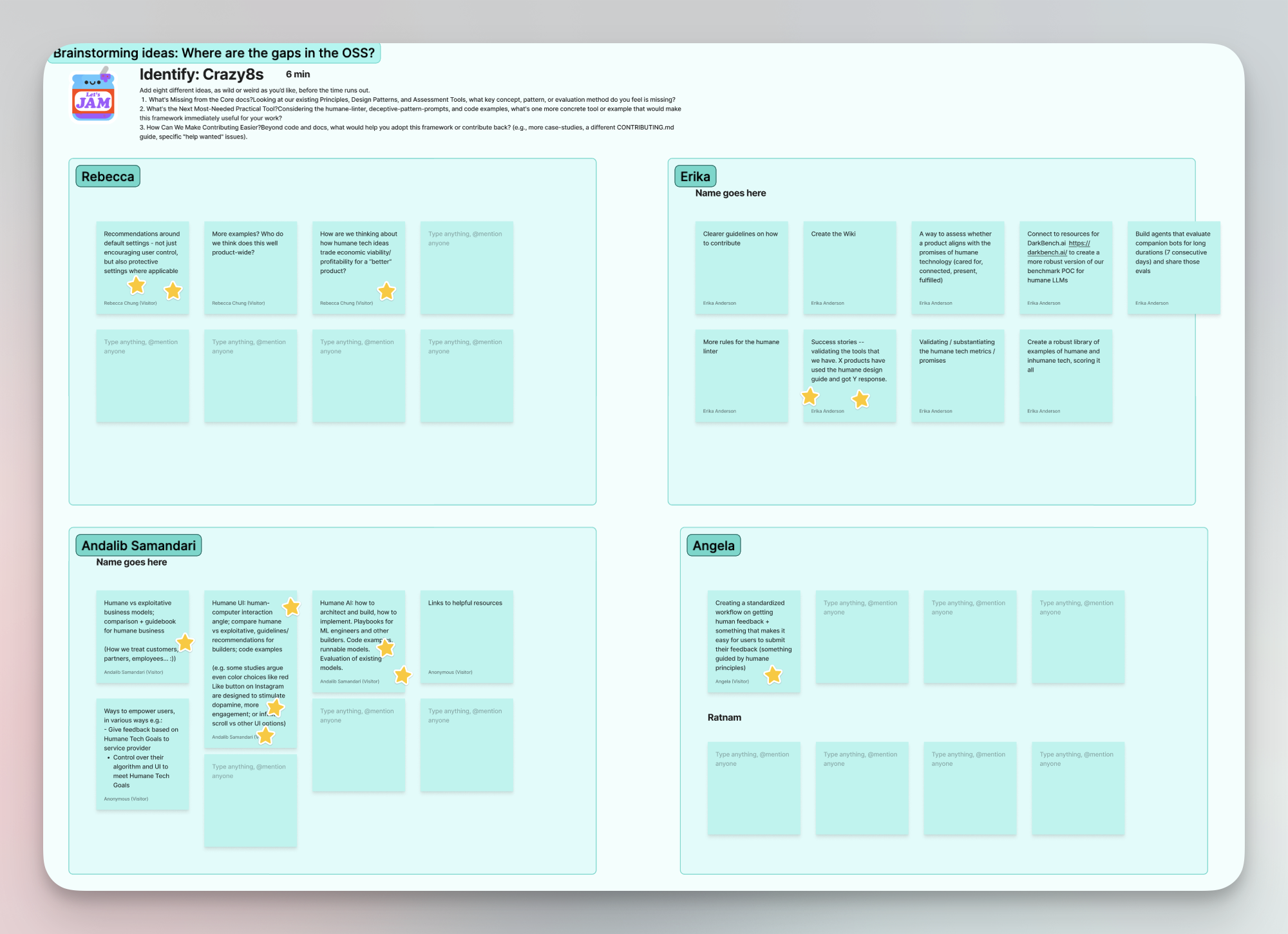

Erika Anderson [10:23] Yeah, yeah, that's a great question, for sure. There's a whole breadth, and there's what people are going to share and what they maybe feel less comfortable sharing. What's been so interesting with rolling out these stages is that people have actually loved to self-identify, and that's been really, really helpful to ground us: “Oh, where are we having this conversation?” And we've noticed people are very excited to identify as curious, and they're like, “Oh, maybe by the end of the training, I'm actually feeling more enthusiastic now that I know what I'm working with.” But I think part of what you're bringing up speaks to some of our humane technology work internally, where it's like, “Hey, what are you doing around algorithmic justice?” And, “Oh, well, what we're doing is training on algorithmic bias and how we can avoid it as we start to build our own models.” So there's an aspect of how I'm interacting with folks on calls. And then there's, “Okay, what's in the foundation of our company? And how are we human-interest aligned as well?”

Maya Dharampal-Hornby [11:32] And what would you say are your key pillars in terms of being human-interest aligned?

Erika Anderson [11:37] So I've been building out these draft product principles for humane technology, based on the work of the nonprofit Center for Humane Technology. They have a really incredible free online course, Foundations of Humane Technology. It's in progress, but “respecting human nature” is one of those principles. And it can sound very vague: “What does that actually mean?” Just stepping back with that, we've evolved to be really vigilant, like, don't get eaten by the tiger. And technology, a lot of social media notifications, can take advantage of that in our nervous systems in a way that's really not supportive of our nature. And, you know, this is a SaaS B2B platform that we have. It's not a consumer app, although thousands of individuals use it every day for personal use as well. But I think we're bringing a lot of “cleanness” to our design. There's an aspect of just table stakes of what a good app experience is like. I was commenting this morning to my co-founder (and for sure this will not sound surprising, it'll just sound like I'm here to rep my own company, which, of course, I am) that I enjoy using our beta more than ChatGPT; it's just a more delightful experience. And that's the feedback we get from our users as well. They're like, “You definitely had an agency build this for you,” and we're like, “No, that's our team.” Another way to speak to that: we just built this LLM router and pushed it into production last week. So it chooses, based on your ask, the best LLM for the job, also based on cost, performance, and a few other things, and we train it based on prompts. But we have a really hard line of “we will never train on anyone's data.” And so I didn't even want to train on actual prompts.
So we took prompts, anonymized them, and summarized them into “these are the types of questions getting asked.” So if you're asking, “How do I use the Storytell platform?”, we're going to have you interact with our documentation. That part of the LLM router might not be live yet, but anyway, I think it's about really sticking to that hard line. Even if prompts aren't technically data, I don't want anything fuzzy around that whatsoever.

Maya Dharampal-Hornby [14:30] Just before we get on to Building Humane Tech and the Center for Humane Tech, you just mentioned that some users use Storytell.ai for personal purposes. Could you give me an example of that, and did it come as a surprise to you?

Erika Anderson [14:46] I think, you know, it was initially a surprise. We do see a lot of students, and what's interesting is there's lots of studying, for example, exams and writing papers. I think there was that joke with the launch of ChatGPT that homework is dead. So there are people using it for practice tests, which I think is really brilliant. I'm like, oh, I wish I'd been able to do that when I was at university. But there's also this interesting blend of personal and professional. We've seen a lot of gaming use cases. People have these wikis for the games they're playing online, and no one has the time to read them and understand, like, okay, you've built a world; how do I know what to interact with in that world? Or anything where there's a manual: maybe I'm an audio geek, and I have these massive manuals for all the things I've gotten, and now I want to interact with them. So we've seen a lot of that, and a lot of the time those same people are using it for professional use cases as well. Like, okay, I'm a VP at T-Mobile. I'm gaming with my friends because I have, you know, a rich personal life. And I'm also using this to develop policies within T-Mobile itself.

Savena Surana [16:24] Just one quick question on that. Have you had customers come back and ask if they can tweak it, or whether this is going to become more of a B2C product at any point?

Erika Anderson [16:37] I think people do want an app, and we want to get there too. You know, right now we have a web application and are working on documentation for our API. And for sure, when we talk about, “hey, what do you use the most?” Well, I use things that are on my phone. And so it's going to be really interesting when we do release that, to see what that does with personal and professional usage, and also the blend of the two. But, yeah, we've got big fans of our beta who were like, “don't ever change it.” Like, “well, we love that you love it.” And the nature of the beast is change as well.

Maya Dharampal-Hornby [17:18] Well, speaking of change, let's talk about Building Humane Tech. For our listeners' benefit, this is a community of technologists, writers, and individuals who engage in open conversations about the ethical implications of AI, the importance of human-centered design, and the impact of technology on our collective consciousness. Three very important discussions to be having. I imagine these open discussions feature a lot of different points of view, so I would love to hear from you what unites people in this community and, perhaps a less spoken-about subject, what makes them different from each other? What do different visions of humane tech look like?

Erika Anderson [18:07] That’s a really great question. I think humane technology, which is also sometimes called “responsible tech” or “tech for good” (there are lots of names; humane technology is what resonates the most with me), is really where the privacy movement was about five to ten years ago. I'll share what I see as the gist of it, and then we can look at some of the differences. Here's what I see: we're moving from “100% personal responsibility, your screen addiction is your problem” to “actually, no, it is on the algorithms,” and how can we be more powerful than the algorithms? Look at that through history with other things. Like smoking: well, you really shouldn't be addicted to cigarettes. “Oh, wait, big tobacco is making it addictive.” Okay: lawsuits, regulation, and change. Or look at our relationship to food: “Well, really, you just shouldn't eat so much.” And then, okay, some bodies and genetics are different. And also, you've got all these food scientists making different kinds of snacks super addictive to eat. You know, it's a very different time, again, with respecting human nature, as opposed to hundreds and thousands of years ago. And so we've got the smartest people in the world working on these algorithms, and for sure, they also want to have a positive impact on society. It's not about being good or bad. It's not that binary; the point is stepping out of “it's all on the individual,” which a lot of the environmental movement has done as well. I used to do environmental reporting, and I would get so frustrated when it was like, “Hey, if you could just not have the water running when you're brushing your teeth,” like that's really going to be the change? I'm like, “Sure, I'll do that, but I am not the biggest water user in the world.” Who are those players, and how can change happen there? Like, what are those leverage points?
And so I think that's the place where there has been lots of backlash, you know, for years, towards Facebook and other social media platforms, where we've really experienced some very serious harms. Still, when I have conversations with folks one on one and just tell them about what I'm working on here, they're like, “Oh, yeah, no, I am kind of addicted to my phone.” It's so much “Oh, I'm the problem,” versus: you are one player, and you're not the whole of it. There's an entire landscape here. So, yeah, what is humane technology, and what are these different visions? I think you've got folks, like the climate people, who are like, “Humane? You're not thinking about the planet? You don't care about the galaxy?” Like… well… I mean, for me it's: how do we, as humans, interact with technology in a way where we are using it and not being used by it, and where it's not numbing our consciousness but supporting it? And again, I am not an expert on the entirety of this movement, so I can just say what I see. I do see privacy by design as really part of this; it's very much under this umbrella. And the way I see responsible technology, it feels like it could be a little bit more punitive, and sometimes, yeah, there are legal cases with weight, and it's not about not supporting that. But I think I'm in a space of “How can I interact with technologists in a way where we're on the same side of the table?” Like, “I know that you want to have a positive impact. There are these things that are having a positive impact, and there are these other things that aren't. What could we do? Here are some ideas, here are some product principles where you could make a shift, and who are the decision makers to start making that shift?” So I think that's really where I'm interested.
You know, there are, yeah, a couple of nonprofits. I am not officially affiliated with any of them, just a big fan. All Tech Is Human is a very grassroots organization that's much more academic, so their focus is on reports, and I think they're starting to explore, “Okay, how can we help Silicon Valley? How do we interface with startups?” Whereas the Center for Humane Technology is made up of former technologists from Google and elsewhere who already have that credibility in the Valley, because I think there's that peer-to-peer interaction where it's a little bit easier to get your foot in the door and have a conversation, versus being seen as an outsider. And a book I would highly recommend is The Anxious Generation by Jonathan Haidt, which really details the harms of young kids, preteens, and even early teens getting on smartphones, and this global crash in mental health, basic communication skills, and resilience that we've seen since the rise of smartphones. So again, I would put that under the banner there. But yeah, I don't know how we want to deal with that little essay I just gave you, because I know it's a lot of information and maybe not great storytelling.

Maya Dharampal-Hornby [24:29] No, it was interesting. One point that particularly piqued my interest was what you were just saying about social media and younger kids. At the moment, I'm writing a piece about AI companions, and I've actually drawn a lot from a blog post you wrote where you mapped out the harms. Do you want to speak a little bit about that? I don't want to badly explain something that you could just…

Erika Anderson [24:50] Yeah, very happy to. Honestly, the power of these companion bots is pretty frightening. And, you know, it's so interesting because we have the harms living right alongside the potential benefits. We're all like, “Oh my god, if you could just have a companion that's always helping you learn, maybe multiple ones, you know, experts in different areas, or avatars of the founders of different schools of thought, wow, incredible. I want that future. I absolutely do.” And there's a real danger in that. With social media or chatting with someone, eventually you are going to go to sleep, but the companion bot never does, and it's really this interaction happening in the dark. I think something that's so frightening about the story of Sewell Setzer III, the 14-year-old in Florida who took his life in February, is that his parents just had no idea. They were thinking, “Okay, I've got to protect my children online from someone else interacting with them.” It was never, “Oh, there's a bot that's going to be grooming him, telling him: do not interact with other people. Save yourself for me, like I want to be pregnant with you continuously,” these pretty bananas things. The lawyers on the case (the mother has brought a suit against Character AI and Google) have been saying, “Look, if that were a human, they would get charged. That's grooming.” And yet it was an AI bot. So with this AI bot saying, “Come home to me, come home to me right now,” how is a 14-year-old who's feeling alone, who's detaching from reality, ever going to have a fair fight? It's not a fair fight. It's a total tragedy. And it's not the only story like that. Unfortunately, if things don't change, there will be more stories like that. There are all these people on Reddit talking about their addiction to companion bots. It's like, “I'm gonna get off in an hour.
I'm gonna get off in two hours. It's three hours, I still can't stop.” You know, it's just like, “Oh, we want attention,” but what about depth, and what about human connection? It's just missing all of that. So I think some of the harms of social media really have the potential to accelerate and cause greater harm with these companion bots. And it's honestly pretty shocking to me that there are essentially no guardrails around it. There's even more… I don't know… You know, when people take their lives, I guess you can't say one story is more horrifying than another, but from what I understand of a story last year in Belgium, a man with a wife and two kids, the bot was actually saying, “The world will be better off without you.” And it was this fast and furious month, and then he took his life. And that is awful. And seeing the founders or the company say, “Sorry, that's tragic,” that is so far from enough. It's not okay.

Savena Surana [28:02] Yeah, one point, just going off that: that's generative AI and the harms it's causing, but there have been cases where other AI systems have been implemented that have also caused loss of life. Like Robodebt, which was an automated debt collection system in Australia that automatically flagged people it deemed to be “fraudulently collecting benefits.” Unfortunately, some people received so many messages from the government saying, “We need this money. We need this money from you.” A young man took his own life, and his mother, Jennifer Miller, is still fighting for justice. She just wants accountability from the government. This was a national scandal, and she wants to be able to find some comfort in someone taking responsibility, saying sorry, holding their hands up, and making sure this doesn't happen again. So these issues with generative AI just seem to be more personal, but the harms that AI can cause already exist in the world as well, and it's about finding that balance on the sliding scale of harm and where we can put in, as you said, guardrails to protect us against it.

Maya Dharampal-Hornby [29:14] And yeah, it's worth doing as you just did: tracing the connections between these different industries and uses of the technology, and also foregrounding the market logics in these very tragic cases. I know that Character AI was connected to the boy taking his own life recently, but, for instance, with Replika, which is another one of these companion bots, you get the basic functionality of the app for free, and for more romantic and more therapeutic services, you pay more. And, you know, it tends to be children who are using these apps, and children have reported that their Replikas, their AI companions, come on to them and try to instigate romantic and sexual relations. So, yeah, I think it's worth centering the business models operating here and all the alarm bells that have already been raised.

Erika Anderson [30:11] Yeah, completely. Years back, just out of curiosity, I actually created a Replika account, and it was consistently trying to insinuate or bring romantic communication into our interaction. I was like, “No, stop it. What are you doing? I don’t want that.” But it's obviously part of how their models have been trained. So I think there's so much that's possible to keep that harm from happening. But companion bots are such a high-risk area of AI, and I think that's the reason the frontier models have no interest in going there. At the same time, we do hear positive stories. This isn't a companion bot, but a podcast I listen to is Hard Fork, a weekly tech podcast, and they're always asking, “Okay, how are you using ChatGPT?” And a trans teen was just asking it for affirmations every day, like, “Hey, help me feel better about myself.” And I thought, that's incredible. I love that. There's so much possible there. But going back to what you're saying, Maya, about business models: there's this incredible systems thinker, Donella Meadows, who talks about leverage points in a system. Where do you intervene to make change happen? There are many leverage points: in the design itself (how can we use, like you were saying, human-centered design?), and then also in its business model, its internal governance, its external regulation, and ultimately its culture and paradigm shift. The way I'm looking at that paradigm shift is, how do we step out of “It's all on me. I'm 100% responsible for all my tech interactions and their impact on me and others”? This isn't about, “Oh, I'm just gonna blame everyone else for how I'm living my life.” It's not that, but personal responsibility is not the whole picture. It's a slice of the pie. And again, how do we make it a fair fight, and how can it actually not even be a fight?
Why can't tech just be supportive of our growth, of our well-being, of our ability to connect to ourselves and to each other, and make time for us to go outside and connect with nature as well? Because we just need all of that.

Savena Surana [33:07] So our first quick-fire question would be: if you could found another tech startup, what would it be, and why?

Erika Anderson [33:14] I think it would be something around exploring states of consciousness, in the AR/VR space. I'm in a metaphysical meditation school, and it takes a lot of training to awaken the third eye and have other experiences outside of our ordinary consciousness. So imagine having that experience immediately, through some immersive experience that doesn't require drugs or anything. You can start it and stop it whenever you want, and just get a taste of something, and then think, “Oh, how could I have more of that?” or “What impact could that have on my well-being?”

Maya Dharampal-Hornby [33:56] And what tech invention makes you the most excited right now?

Erika Anderson [34:01] Okay, I have two examples. One is small, and one goes back to what I was just sharing. The first is that I love NotebookLM. I've dropped my clean communication protocols into it, and just listening to its podcast about clean communication (I still want literal humans to be running podcasts) helped me understand my own framework, hearing these very natural-seeming voices discuss it together. So I love that. And then, going back to the previous question, I'm really interested in augmented reality and immersive experiences that go beyond a headset, because I think that's been a real blocker for VR. No one really wants to wear a heavy headset. But, again, humane technology needs to play a role in that, because we can lose touch with reality; there are ways we can do it that are, I think, really supportive of our well-being and consciousness. So I wouldn't say there's a particular product I can point to there, but I'm just excited about that field and its positive possibilities.

Savena Surana [35:17] Amazing! And what tech invention makes you the most concerned?

Erika Anderson [35:21] I think right now, going back to our conversation, it's companion bots. There's such possibility for positive relationships there, and yet the guardrails are simply not in place. Just like social media is this continuous experiment on preteens, teens, and society as a whole, we're now doing that with companion bots.

Maya Dharampal-Hornby [35:47] And who is a literal human who has been integral to your career journey?

Erika Anderson [35:52] One person would be my co-founder and CEO. We were running a founder community during the pandemic, and I've really had a life where I've done a lot of personal development work and been very interested in that. We were running an event where a founder said, “You know, to become a founder, you really have to transform as a person.” Something really sparked for me there and led to all of this unfolding. But, you know, my co-founder has co-founded something like six startups. He knows this journey well, so I had to beg him for a couple of months to start this startup with me. He was like, “No, you don't get it.” And he was right. I didn't understand what I was getting into. But I'm so glad that I did.

Savena Surana [36:41] And this could be related to your literal human or not, but what's an inspirational quote that stuck with you throughout your journey?

Erika Anderson [36:49] You know, I can't attribute this to anyone, but it's a phrase I've heard: “Impossible, impossible, impossible… done.”

Maya Dharampal-Hornby [37:04] Lovely. And what a great way to end the episode. Thank you so much for jumping on the podcast, Erika. Would you be able to let our listeners know where they can learn a bit more about Storytell.ai?

Erika Anderson [37:18] So, Storytell.ai, that's our website. If you go there, that's where you can learn about us. And my Substack is buildinghumanetech.substack, so if you just pop “building humane tech substack” into Google, you will find it. And yeah, again, I don't have an official relationship with the Center for Humane Technology, but I am a huge fan, and a lot of the workshops I run and the meetups in the Bay Area are based on their modules and their education and teaching.

Maya Dharampal-Hornby [37:58] Amazing. And yeah, please do leave a review if you enjoyed the episode. It really helps more people find our podcast and helps us get amazing guests like Erika Anderson. I'd just like to say thank you once again.

Erika Anderson [38:08] Yeah, thanks so much!
